
We have spun out a general file system implementation and specification, originally created for the needs of Dask, to give all users simple access to many local, cluster, and remote storage media. Dask and Intake have moved to use this new package: fsspec.

For context, we are talking about the low-level business of getting raw bytes from some location. We are used to doing that on a local disk, but communicating with other storage mechanisms can be tricky, and is certainly different in every case. For example, consider the different ways you would go about reading files from Hadoop, from a server for which you have SSH credentials, or from a cloud storage service like Amazon S3. Since these questions are important to answer when dealing with big data, we developed code to complement Dask just for the job, and released packages like s3fs and gcsfs.

We found that those packages, which were built and released standalone, were popular even without Dask, partly because they were being used by other PyData libraries such as pandas and xarray. So we realised that the general idea of dealing with arbitrary file systems, as well as helpful code to map URLs to bytes, should not be buried in Dask, but should be made open and available to everyone, even if they are not interested in parallel/out-of-core computing.

Consider the following lines of code (which use s3fs):

```python
>>> import fsspec
>>> of = fsspec.open("s3://anaconda-public-datasets/iris/iris.csv", mode='rt', anon=True)
>>> with of as f:
...     print(f.readline())
...     print(f.readline())
5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
```

This parses a URL and initiates a session with AWS S3 to read a particular key in text mode. Note that we specify that this is an anonymous connection (for those who don’t have S3 credentials, since this data is public). The object `of` is a serialisable `OpenFile`, which only communicates with the remote service within a context; `f`, however, is a regular file-like object that can be passed to many Python functions expecting methods like `readline()`. The output is two lines of data from the famous Iris dataset.

This file is stored uncompressed, and can be opened in random-access bytes mode.

This allows seeking to and extracting smaller parts of a potentially large file without having to download the whole thing. When dealing with large data, this is useful both for local data exploration and for parallel processing in the cloud (which is exactly how Dask uses fsspec).
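As a minimal sketch of random access, the snippet below seeks to a byte offset and reads a few bytes through `fsspec.open` in `'rb'` mode. It runs against a small temporary local file so that it needs no network or credentials; the helper name `read_byte_range` and the file name are illustrative assumptions, not part of fsspec.

```python
import os
import tempfile

import fsspec


def read_byte_range(urlpath, offset, length, **storage_options):
    """Hypothetical helper: read `length` bytes starting at `offset`."""
    with fsspec.open(urlpath, mode="rb", **storage_options) as f:
        f.seek(offset)          # jump straight to the part we want
        return f.read(length)   # read only that part


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "iris_sample.csv")
    with open(path, "wb") as out:
        out.write(b"5.1,3.5,1.4,0.2,Iris-setosa\n4.9,3.0,1.4,0.2,Iris-setosa\n")

    # The first line is 28 bytes long, so offset 28 is the start of line two.
    chunk = read_byte_range(path, offset=28, length=3)
```

The same helper should accept a remote URL plus backend options (for example `anon=True` for a public S3 key), in which case the backend decides how much data is actually transferred.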

Now compare with the following:

```python
>>> of = fsspec.open("https://datahub.io/machine-learning/iris/r/iris.csv", mode='rt')
>>> with of as f:
...     print(f.readline())
...     print(f.readline())
...     print(f.readline())
sepallength,sepalwidth,petallength,petalwidth,class
5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
```

This uses a different backend for HTTP locations, but has exactly the same API. (The output is slightly different because this version of the dataset includes a header line.)
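To illustrate the "same API, different backend" point without touching the network, here is a small sketch that runs one function against two backends that ship with fsspec: the in-memory file system (`memory://`) and the local disk. The function name `read_header` and the paths are assumptions made for this example.

```python
import os
import tempfile

import fsspec


def read_header(urlpath, **storage_options):
    """Return the first line of a text file at any location fsspec understands."""
    with fsspec.open(urlpath, mode="rt", **storage_options) as f:
        return f.readline().rstrip("\n")


csv_text = (
    "sepallength,sepalwidth,petallength,petalwidth,class\n"
    "5.1,3.5,1.4,0.2,Iris-setosa\n"
)

# Backend 1: the in-memory file system, selected by the "memory://" prefix.
with fsspec.open("memory://demo/iris.csv", mode="wt") as f:
    f.write(csv_text)
header_from_memory = read_header("memory://demo/iris.csv")

# Backend 2: the local file system, selected by a plain path.
with tempfile.TemporaryDirectory() as tmp:
    local_path = os.path.join(tmp, "iris.csv")
    with fsspec.open(local_path, mode="wt") as f:
        f.write(csv_text)
    header_from_disk = read_header(local_path)
```

Only the URL changes between the two calls; `read_header` itself knows nothing about where the bytes live.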

Alternatively, you can work with file system instances, which have all of the methods that you would expect, inspired by the built-in `os` module:

```python
>>> fs = fsspec.filesystem('s3', anon=True)
>>> fs.ls('anaconda-public-datasets')
['anaconda-public-datasets/enron-email',
 'anaconda-public-datasets/fashion-mnist',
 'anaconda-public-datasets/gdelt',
 'anaconda-public-datasets/iris',
 'anaconda-public-datasets/nyc-taxi',
 'anaconda-public-datasets/reddit']
>>> fs.info("anaconda-public-datasets/iris/iris.csv")
{'Key': 'anaconda-public-datasets/iris/iris.csv',
 'LastModified': datetime.datetime(2017, 8, 10, 16, 35, 36, tzinfo=tzutc()),
 'ETag': '"f47788bbfca239ad319aa7a3b038fc71"',
 'Size': 4700,
 'StorageClass': 'STANDARD',
 'type': 'file',
 'size': 4700,
 'name': 'anaconda-public-datasets/iris/iris.csv'}
```
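The same instance methods can be tried offline. The sketch below uses the local backend (`"file"`) on a temporary directory; the file name is made up for the example, and only a handful of the available methods are shown.

```python
import os
import tempfile

import fsspec

# The local backend; an "s3" instance exposes the same method names.
fs = fsspec.filesystem("file")

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "iris.csv")

    fs.pipe(path, b"5.1,3.5,1.4,0.2,Iris-setosa\n")  # write bytes in one call

    # List the directory; the local backend returns full paths.
    names = [os.path.basename(p) for p in fs.ls(tmp, detail=False)]

    details = fs.info(path)            # dict with at least name, size, type
    size, kind = details["size"], details["type"]

    with fs.open(path, "rb") as f:     # file-like object, as with open()
        content = f.read()

    fs.rm(path)
    still_there = fs.exists(path)
```

Backends may add their own keys to the `info()` dictionary (as S3 does above with `ETag` and `StorageClass`), so portable code is probably best limited to `name`, `size`, and `type`.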

The point is, you do (almost) exactly the same thing for any of the several backend file systems, and you get the benefit of extra features for free:

- `open()` and `open_files()` functions, the latter of which will expand glob strings automatically
- key-value mapping views of a location, via `get_mapper()`:

```python
>>> m = fsspec.get_mapper('s3://zarr-demo/store', anon=True)
>>> list(m)
['.zattrs',
 '.zgroup',
 'foo/.zattrs',
 ...]
>>> m['.zattrs']
b'{}'
```

- transactions, in which files written inside the context are not committed if an error occurs:

```python
>>> fs = fsspec.filesystem('s3')  # requires credentials
>>> with fs.transaction:
...     fs.put('localfile', 'mybucket/remotefile')
...     raise RuntimeError
>>> assert not fs.exists('mybucket/remotefile')
```
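Two of these features, the mapping view and glob expansion in `open_files()`, can be sketched together on a temporary local directory. The file names below are invented for the example; a remote URL with the relevant backend options should behave the same way.

```python
import os
import tempfile

import fsspec

with tempfile.TemporaryDirectory() as tmp:
    # Dict-like view of the directory: keys are paths relative to its root.
    m = fsspec.get_mapper(tmp)
    m["part-0.csv"] = b"5.1,3.5,1.4,0.2,Iris-setosa\n"
    m["part-1.csv"] = b"4.9,3.0,1.4,0.2,Iris-setosa\n"
    m["notes.txt"] = b"not a CSV\n"
    keys = sorted(m)

    # open_files() expands the glob and returns one OpenFile per match.
    files = fsspec.open_files(os.path.join(tmp, "*.csv"), mode="rt")
    first_lines = []
    for of in files:
        with of as f:
            first_lines.append(f.readline())
```

Nothing is opened until each `OpenFile` is used as a context manager, which is what makes these objects convenient to hand to parallel workers.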

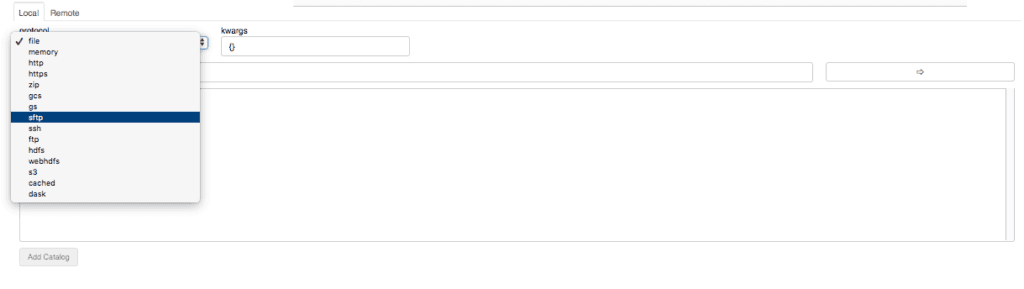

Intake now depends on fsspec for its file handling. Intake’s purpose is to ease the process of finding and loading data, so being able to browse any file system (or anything that can be thought of as a file system) is important. In the main GUI, you can now select amongst many possible backends, not just local files:

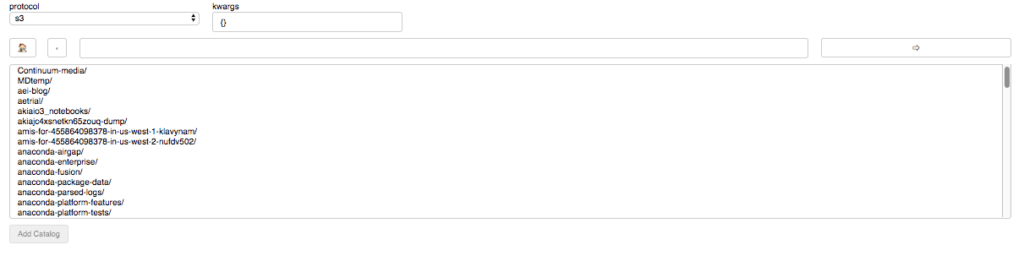

This allows you to select catalogs that may be remote, and have them load remote data too. Of course, you still need to have the relevant driver installed, and indeed to have access to a service matching the protocol (the kwargs box exists to add any additional parameters that the backend may need). For example, in the following image, I can browse S3, and see all of the buckets that I am an owner of as “directories”. This requires no extra configuration, since my S3 credentials are stored on the system.

A more subtle point is that a lot of the logic for how to deal with files is common across implementations. The `fsspec` package contains a spec for other file system implementations to derive from, which makes writing new file system wrappers (compatible with Dask, Intake, and others) much simpler. Such implementations will also inherit many of the for-free features.
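To give a feel for what deriving from the spec involves, here is a deliberately tiny, read-only sketch: a hypothetical `DictFileSystem` that serves bytes from a Python dict. The class name, the `dictfs` protocol string, and the constructor argument are all assumptions made for illustration; a real backend would need considerably more (writing, error handling, registration of the protocol).

```python
import io

from fsspec.spec import AbstractFileSystem


class DictFileSystem(AbstractFileSystem):
    """Hypothetical read-only file system backed by a {path: bytes} dict."""

    protocol = "dictfs"   # illustrative name, not a registered protocol
    cachable = False      # skip fsspec's instance cache for this toy class

    def __init__(self, files, **kwargs):
        super().__init__(**kwargs)
        self._files = {name.strip("/"): data for name, data in files.items()}

    def ls(self, path, detail=True, **kwargs):
        path = self._strip_protocol(path).strip("/")
        prefix = path + "/" if path else ""
        entries = {}
        for name, data in self._files.items():
            if name == path:  # listing a file returns the file itself
                entries[name] = {"name": name, "size": len(data), "type": "file"}
            elif name.startswith(prefix):
                child = prefix + name[len(prefix):].split("/", 1)[0]
                if child == name:
                    entries[child] = {"name": name, "size": len(data), "type": "file"}
                else:  # an intermediate path component acts as a directory
                    entries[child] = {"name": child, "size": 0, "type": "directory"}
        if not entries and path:
            raise FileNotFoundError(path)
        out = [entries[key] for key in sorted(entries)]
        return out if detail else [entry["name"] for entry in out]

    def _open(self, path, mode="rb", **kwargs):
        if mode != "rb":
            raise NotImplementedError("this sketch is read-only")
        path = self._strip_protocol(path).strip("/")
        if path not in self._files:
            raise FileNotFoundError(path)
        return io.BytesIO(self._files[path])


fs = DictFileSystem({"data/iris.csv": b"5.1,3.5,1.4,0.2,Iris-setosa\n"})

top_level = fs.ls("", detail=False)
size = fs.info("data/iris.csv")["size"]   # info() is inherited, built on ls()
is_dir = fs.isdir("data")                 # inherited
missing = fs.exists("data/nope.csv")      # inherited
with fs.open("data/iris.csv", "rt") as f: # text mode is layered on by the base class
    line = f.readline()
```

Only `ls()` and `_open()` are written by hand here; `info()`, `isdir()`, `exists()`, and text-mode `open()` come from the base class, which is the "for-free" inheritance described above.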

Therefore, I invite all interested developers to get in touch to see how you might go about implementing your favourite file system.
