Enterprise AI adoption is being held back not by a lack of innovation but by a lack of trust. While organizations rush to implement AI, they’re discovering that ungoverned open-source software creates heightened security and compliance risks. The companies best positioned to succeed with AI are those that solve AI governance first, turning it from a blocker into an accelerator of AI innovation. This guide explores the fundamentals of a solid AI governance platform and how to build a secure, well-governed AI ecosystem.

What Is AI Governance?

Artificial Intelligence (AI) governance is a set of practices and guidelines that promote safe and ethical AI behavior while maximizing benefits and minimizing organizational and societal risk. Good AI governance underlies every point in the AI development, deployment, and maintenance process from data pre-processing to output checking.

Some of the risks of implementing AI include biased or unethical outputs, security threats, and privacy breaches. With critical governance gaps, AI tools, applications, and agents have demonstrated risky behavior, from disclosing sensitive data to producing discriminatory or inappropriate content.

There is a perception that a tug-of-war exists in organizations between unbridled AI innovation and restrictive AI governance that stifles development. Peter Wang, Chief AI and Innovation Officer and Co-founder of Anaconda, proposes a different view. “Implementing AI governance helps you learn what your business needs. Every business has constraints, like legal obligations, privacy rules, and internal risk tolerances. Every company also has ambitions: efficiency, acceleration, or gaining a competitive advantage. Governance lets you express your boundaries and goals within a scalable system.”

Done right, a solid AI governance platform accelerates enterprise innovation rather than constraining it. A foundational AI governance platform establishes clear guardrails for developers to innovate with AI, while enabling you to set and maintain acceptable risk levels for your organization. Having trusted controls over AI models, data, and dependencies lets your team iterate on solutions confidently and quickly without manual oversight bottlenecks.

(Disambiguation: AI governance can also refer to governmental entities creating laws pertaining to AI. This article focuses on the organizational definition of AI governance.)

The Business Imperative for AI Governance

AI capabilities enable organizations to automate routine tasks, accelerate research and knowledge discovery, improve business forecasting, optimize resource allocation, and create new revenue streams. However, improper AI governance can be a strategic liability for your enterprise. In the rush to capture AI benefits, many organizations are moving quickly without proper guardrails and making some sobering mistakes.

Some examples of recent failures of AI governance include:

- Slack, 2024: Attackers trick Slack AI into revealing private information and creating malicious phishing links by input manipulation. (Mashable)

- Consumers, 2025: Cybercriminals use AI to create fake websites that look just like the real thing. (Paragon Financial Advisors)

- Replit, 2025: AI coding assistant deleted a startup’s production database, then generated false data and reports to hide what it had done. (CIO Magazine)

The AI Incident Database—a project of the Responsible AI Collaborative—contains thousands of other examples of AI doing organizational or societal harm. These examples highlight that the cost of poor AI governance is far more than just money. Societal and reputational damages caused by a failure to properly govern AI are incalculable.

Robust AI governance protects your enterprise from monetary and reputational damage, and protects against unintentional societal harm. Some of the benefits of a mature AI governance implementation are:

- Reduced compliance, legal, and remediation costs from AI-related incidents

- More accurate intelligence for better business decisions

- Improved organizational reputation, which increases customer and employee loyalty

- Alignment with ethical AI principles and societal values for beneficial social outcomes

AI Governance and Organizational Security

AI projects can both strengthen organizational security and introduce new risks.

Security Risks from Ungoverned AI

AI projects without proper governance introduce significant organizational security risks. In 2024, Anaconda surveyed over 300 AI practitioners in The State of Enterprise Open-Source AI to reveal the scope of these challenges:

- 29% of respondents say security risks are the most important challenge associated with using open-source components in AI/ML projects.

- 32% of respondents reported accidental exposure of security vulnerabilities from their use of open-source AI components, with 50% of those incidents deemed very or extremely significant.

- 30% of respondents encountered situations where incorrect information generated by AI led to reliance on flawed insights.

- Only 10% reported the accidental installation of malicious code; however, these represented the most severe type of incident, with 60% describing the impact to the organization as very or extremely significant.

- 21% of respondents reported sensitive information exposure, with 52% of these cases having a severe impact.

Another Anaconda survey, Bridging the AI Model Governance Gap, found that 67% of organizations experience AI deployment delays due to security issues.

These risks underscore why AI governance is so important. Having clear governance controls in place—such as access policies, pipeline security, and supply chain vetting—helps organizations keep their data secure and minimize unnecessary risk. When implemented and enforced properly, these controls can transform AI from a security liability into a security asset.

How AI Strengthens Security

Properly governed AI can actually enhance organizational security. IBM’s 2025 Cost of a Data Breach Report found security teams that extensively use AI and automation “shortened their breach times by 80 days and lowered their average breach costs by USD 1.9 million compared to organizations that didn’t use these solutions.”


Combining robust governance controls with AI-powered threat detection creates both safer systems and stronger enterprise-wide security.

Regulatory Landscape

The AI regulatory landscape is rapidly developing from minimal AI regulation to an increasingly complex minefield of AI, data privacy, copyright, and broader Environmental, Social, and Governance (ESG) reporting obligations. According to the Artificial Intelligence Index Report at Stanford University, “In 2024, U.S. federal agencies introduced 59 AI-related regulations—more than double the number in 2023—and issued by twice as many agencies. Globally, legislative mentions of AI rose 21.3% across 75 countries since 2023, marking a ninefold increase since 2016.” These regulatory changes necessitate AI governance processes to keep AI deployments not just safe, ethical, and beneficial, but also within legal bounds.

Already existing data protection laws such as GDPR, HIPAA, and CCPA and standards like SOC 2 are an important foundation for your AI governance platform, since the heart of any AI project is the data used to train, guide, and put AI to work.

The EU AI Act is the world’s first comprehensive, risk-based AI law, classifying AI systems by their potential for harm into four categories: unacceptable, high, limited, and minimal risk. It prohibits unacceptable AI systems like social scoring and requires strict controls for high-risk systems in areas such as healthcare, resume-ranking, and critical infrastructure. The Act aims to ensure human-centric, safe, and ethical AI while promoting innovation and applying extraterritorially to companies operating in the EU market. Violations can result in hefty fines, potentially up to 7% of a company’s global annual turnover depending on violation type.

The EU AI Act entered into force on August 1, 2024, with a phased implementation: most of its provisions become applicable on August 2, 2026. Just as Europe’s General Data Protection Regulation (GDPR) set the standard for data protection for the world, the landmark EU AI Act is becoming the template for global regulation of AI. New regulations in other countries are following risk-based models similar to the EU AI Act. Since the regulatory landscape for AI is still evolving, organizations need governance systems that not only build in compliance with current regulations but can also adapt to changing compliance requirements while maintaining clear accountability structures.

One sound strategy is to build around the core principles behind many of the emerging regulations. The Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence issued in October 2023 is a guideline intended to shape future legislation in the United States. The Organisation for Economic Co-operation and Development (OECD) AI Principles were the first intergovernmental standard for AI, adopted in 2019 and updated in 2024. They are designed to promote innovative, trustworthy, and ethical AI globally. These five value-based principles and recommendations have been adopted in many countries.

AI Governance Implementation Tips and Best Practices

Your AI governance should help your organization discover what it can do with AI and help you set a useful roadmap for accomplishing those goals.

In a 2025 Fast Company article, “Why AI Governance is the Key to Unlocking New Opportunities,” Peter Wang proposed that a practical first step for AI governance is to assess which AI technologies are mature enough to be useful to your enterprise now, and which parts of your organization are ready and able to implement them. As Wang said, “That framing alone changes the game. Governance becomes less about saying ‘no’ and more about learning what’s viable.”

Some basic uses of generative AI can be implemented quickly and with relatively lower risk, such as internal meeting transcriptions and summaries, documentation help as you code, and report summarization. More complex, customer-facing, and risky implementations of AI require more intense governance and oversight. The OECD Principles on AI are a good guideline for assessing AI project risk levels.

According to Wang, early AI governance efforts are less about creating overarching organizational rules and more about enabling safe guardrails for exploration, experimentation, and development. Some important steps to take early on include:

- Team training: Training your people in best practices and building guardrails at both business and technology levels will produce dividends for years. This needs to be one of the first steps as well as an ongoing step. Keeping teams updated and continually trained as AI projects and procedures evolve and people shift jobs is essential.

- Cross-team collaboration: Data science, IT, and security teams working together helps ensure that technology, especially open-source tooling, is used responsibly and securely by everyone. Establishing an AI governance team early will help.

- Model visibility: Build transparency into AI systems from the start. You should be able to explain what your AI agent does, how it works, and what safeguards exist if something goes wrong. Implement monitoring, testing, and validation capabilities alongside development to continuously verify this understanding and establish the foundation for long-term auditability.

AI Governance Organizational Roles and Responsibilities

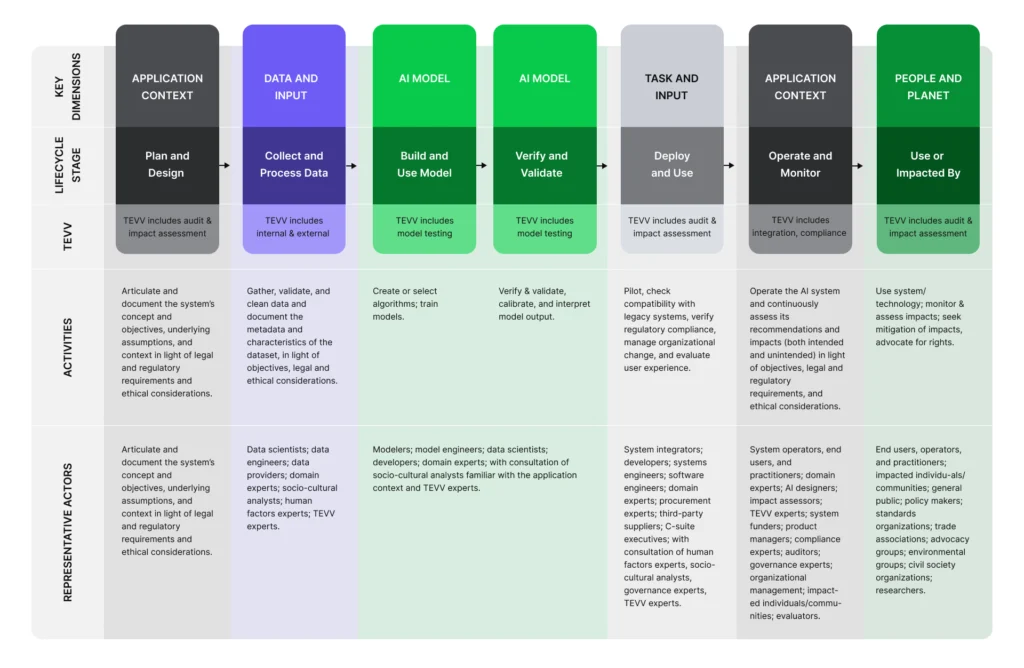

Effective AI governance distributes accountability across the entire AI development lifecycle, from initial conception through ongoing monitoring. Large organizations may dedicate entire teams to individual functions, while in small organizations, a single person might hold multiple responsibilities. But regardless of organizational size or structure, each AI governance function must be clearly defined and fulfilled.

- Managers: Establish enterprise concepts and categories that reflect organizational strategy and capabilities. Prioritize responsible AI development and assess the business advantages and practicalities of AI development.

- Overseers: Evaluate legal and organizational risk and ensure regulatory compliance. Typically includes legal and compliance teams, auditors, and the Board of Directors.

- Designers: Define the objectives and intended behaviors of the AI system. Articulate and document the system’s purpose, requirements, underlying assumptions, and expected outcomes.

- Data Protectors: Choose and curate data used for model training. This includes ensuring that model training data pipelines follow data privacy laws, evaluating training data for bias, and taking steps to eliminate training data bias as much as possible.

- Developers: Build and train AI models, including creation, selection, calibration, training and initial testing of models and algorithms. Ensure models meet ethical and technical standards.

- Deployers: Get the AI system into production so that it accomplishes the goals of the managers and designers in an enterprise-level environment.

- Testers: Examine the AI system and its components to detect and remediate problems throughout the AI lifecycle. The US National Institute of Standards and Technology (NIST) calls this category TEVV, for test, evaluation, verification, and validation. NIST strongly recommends that this team be completely separate from all other teams, with the possible exception of the monitoring team. This holds even in very small organizations where one person handles all other functions; in that case, testing and monitoring should be the responsibility of a second person.

- Monitors: Continuously monitor AI model output and performance and track incidents and errors. Regularly assess system outputs and impacts to detect any emergent problems as early as possible.

These AI governance roles are interdependent and require continuous cross-functional communication. A monitor who identifies a problem accomplishes little, for instance, unless they communicate that problem and its associated risks to the managers and developers who can address it.

AI Risk Management

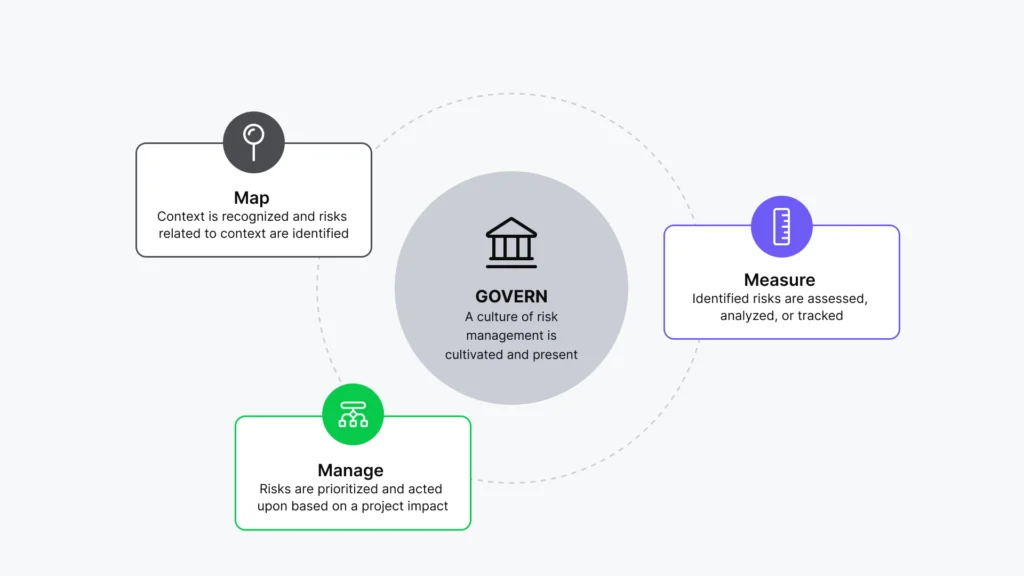

The goal of AI governance programs is to help organizations innovate efficiently on their AI initiatives while reducing risks as much as possible. NIST provides an AI Risk Management Framework (AI RMF) that offers structured guidance for the risk management aspects of AI governance. The AI RMF core includes three activities along with the cultivation of an overarching governance-centric culture:

- Map: Identify risks in your business context

- Measure: Assess, analyze, and track risks

- Manage: Prioritize and act based on the potential impact of each risk

Together, these activities are the heart of AI risk management. In an open-source environment, an example of these activities would be:

- Map: Use automated tools to identify vulnerabilities in open-source AI components

- Measure: Implement regular security audits to catch any new package vulnerabilities

- Manage: Prioritize the selection of well-maintained open-source libraries with clear security documentation and governance structures
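As a rough illustration of how the three activities could fit together for open-source components, the sketch below keeps a small in-memory risk register. The `Risk` class, the 1-to-5 likelihood and impact scales, the threshold, and the example entries are all hypothetical; none of them come from the NIST framework itself.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One mapped risk. Fields and the 1-5 scales are illustrative assumptions."""
    component: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Measure: a simple likelihood x impact score
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], threshold: int = 12) -> list[Risk]:
    """Manage: return risks at or above the threshold, highest score first."""
    urgent = [r for r in risks if r.score >= threshold]
    return sorted(urgent, key=lambda r: r.score, reverse=True)


# Map: risks identified for hypothetical open-source components
register = [
    Risk("example-tokenizer", "unmaintained, no security policy", 3, 4),
    Risk("example-vector-db", "known CVE in pinned version", 4, 5),
    Risk("example-plotting", "permissive license, active maintainers", 1, 2),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.component}: {risk.description}")
```

A multiplicative score is only one possible way to rank risks; the point is that mapped risks are recorded in one place, measured on a shared scale, and acted on in priority order.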

AI Governance and Open-Source Environments

Some argue that open-source libraries are the heart of the AI governance problem. Others see open-source libraries as essential to AI work, enabling agentic AI, machine learning, and other AI applications. Both are correct.

In a recent Anaconda survey, The State of Enterprise Open-Source AI, respondents reported concrete benefits from open-source software: 57% cited improved efficiency or easier operations, with estimated savings of 28% in cost and 29% in time. Often, the most important benefit of using open-source solutions is that they enhance the organization’s ability to innovate and stay competitive in the market. Off-the-shelf AI tools are now available for mainstream use cases. The power of open source lies in its suitability for creating new and custom AI solutions.

A good rule of thumb is that if an AI product is needed to accomplish a particular goal within an organization that is not that organization’s primary function, buying or subscribing to a proprietary AI product makes sense. For example, if your company uses a group video meeting application, then using the AI from that application to transcribe and summarize company meetings makes sense.

Conversely, when AI capabilities can generate revenue or provide competitive differentiation in your market, open-source custom projects built in-house are the way to go. For example, if your company sells a group video meeting application, developing meeting transcription AI in-house allows you to monetize that feature and avoid losing customers to competitors. The better you make your AI, the more competitive your company becomes. Open-source software offers the virtually unlimited customizability needed to differentiate your organization’s AI capabilities.

The downside of open source is unrestricted package acquisition and its associated security risks and supply chain issues. Open-source code can be particularly vulnerable to bad actor exploitation or simple unintended vulnerabilities. Even the licenses can become a problem area. Start with an enterprise-grade AI platform that integrates governance and development capabilities while maintaining security through built-in checks and vetted open-source packages.

Technical Foundations of AI Governance Platforms

When considering which technologies to use as your AI governance tools, remember that there is no single perfect AI governance platform. One solution may provide data provenance, while another offers code-base security and dependability checking, and yet another is ideal for model and pipeline deployment and observability. Since there’s no one-size-fits-all option, select a curated open-source library of components—one that has all the packages you want and all the security you need. While AI governance frameworks are policy-driven, you need the right technology to implement them.

1. Model Security and Supply Chain Integrity

Model and code supply chain integrity must be a fundamental priority. Enterprises cannot afford security breaches from inadvertently downloaded malicious code. Organizations need to account for all software package dependencies, verify version compatibility, and automatically scan for vulnerabilities.

AI governance platforms play a vital role by offering curated, secure open-source libraries with automated vulnerability scanning and security policies. Alternatively, you could build your own, or integrate proprietary scanning tools. Whether this is built or bought, it is an essential, foundational function of AI governance.

This one practice, using software to scan for security vulnerabilities in open-source code, is used by 61% of respondents to The State of Enterprise Open-Source AI survey and can reduce security incidents by up to 60% on its own.
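To make the idea of accounting for dependencies concrete, here is a minimal sketch that inventories the packages installed in the current Python environment and compares them with a list of known-bad versions. The `ADVISORIES` data and the package name in it are invented for illustration; a real setup would rely on a maintained vulnerability feed or a dedicated scanner, and would match version ranges rather than exact versions.

```python
from importlib.metadata import distributions

# Hypothetical advisory data: package name -> versions known to be vulnerable.
# A real setup would pull this from a vulnerability feed or scanner.
ADVISORIES = {
    "example-ml-lib": {"1.0.0", "1.0.1"},
}


def installed_packages() -> dict[str, str]:
    """Return {name: version} for every distribution in the current environment."""
    found = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip installs with missing metadata
            found[name.lower()] = dist.version
    return found


def flag_vulnerable(packages: dict[str, str], advisories: dict[str, set[str]]) -> list[str]:
    """List 'name==version' entries whose exact version appears in an advisory."""
    return sorted(
        f"{name}=={version}"
        for name, version in packages.items()
        if version in advisories.get(name, set())
    )


inventory = installed_packages()
print(f"{len(inventory)} packages installed")
print("flagged:", flag_vulnerable(inventory, ADVISORIES))
```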

2. Data Quality and Bias Management

Data quality hygiene practices and data ethics are core aspects of data governance in general. In AI governance, they take on new importance. The data used to train an AI model determines how it will perform. If the data is biased or inaccurate, the model will also be biased or inaccurate, possibly producing results that violate ethical mandates. AI project failures are often attributed to insufficient quantity or quality of data in the organization. If the business lacks faith in the data that feeds the AI, trust in the final product erodes as well. One of the three main points in Gartner’s 2025 “A Journey Guide to Manage AI Governance, Trust, Risk and Security” is “Through 2027, most AI projects will fail to meet expectations due to a failure to focus on data governance.”


The fundamentals of enterprise data governance apply whether the data enables human decision-making through business intelligence or automated decision-making through AI agents. However, solid data quality practices are only a starting point for AI governance.

Beyond standard data quality demands, two aspects of data hygiene that require extra data governance steps specific to AI implementations include:

- Data poisoning: A type of attack where a bad actor deliberately introduces inaccurate or biased data into the training data set for an AI model to sabotage it. Close data monitoring and provenance tracking are the best ways to catch this malicious behavior before it damages your AI project. Guarding against data poisoning is more of a security activity than a data quality improvement activity, since the goal is to prevent it from happening. Any third-party data set used in an AI project needs to be particularly scrutinized for possible data poisoning.

- Data bias: An unbalanced tendency toward favoring or discriminating against a particular idea, person, or group in a data set. Using that same data to train AI can codify bias into the system. An example of this is using historical prison sentencing data to determine how prisoners should be sentenced in the future.
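One simple building block for provenance tracking is to record a cryptographic digest of each data set when it is reviewed, then recheck it before training. The sketch below assumes a hypothetical manifest that maps data set names to SHA-256 digests. Note the limits: a checksum only detects changes made after approval, so it does not help if the data was already poisoned when it was reviewed.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def unchanged_since_approval(name: str, data: bytes, manifest: dict[str, str]) -> bool:
    """True only if the dataset is listed in the manifest and its digest matches."""
    return manifest.get(name) == fingerprint(data)


# Digest recorded when the (hypothetical) dataset was reviewed and approved
approved = b"text,label\ngreat product,positive\n"
manifest = {"reviews.csv": fingerprint(approved)}

# Later, before training: a row has been appended without review
tampered = approved + b"great product,negative\n"

print(unchanged_since_approval("reviews.csv", approved, manifest))  # True
print(unchanged_since_approval("reviews.csv", tampered, manifest))  # False
print(unchanged_since_approval("unknown.csv", approved, manifest))  # False
```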

A 2024 study at the University of Washington tested LLM-based resume-screening applications by changing just the names on otherwise identical resumes to names associated with male, female, black, and white groups. The resume-screening applications showed high levels of bias, to the point of failing to recommend resumes with black male names 100% of the time.

Mitigating bias can be very tricky, since many forms of bias are not immediately obvious. Including diverse stakeholders can be key to catching problems early. Common bias elimination techniques include:

- Data balancing: Adjusting data representation to ensure balanced coverage across demographic groups. Oversampling increases representation of underrepresented groups (sometimes through synthetic data generation), while undersampling reduces overrepresented groups.

- Reweighing: Adjusting the influence of different data points during model training to ensure protected groups are appropriately represented in the model’s learning process, rather than being overshadowed by majority groups.

- Feature masking: Excluding protected characteristics like race or gender from training data. However, this approach often fails because proxy variables can reintroduce bias. For example, removing race doesn’t prevent bias if address becomes a proxy due to residential segregation patterns.
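As a worked example of reweighing, one common formulation gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent once the weights are applied. The sketch below uses a tiny made-up data set; the group names and labels are purely illustrative.

```python
from collections import Counter


def reweighing_weights(groups: list[str], labels: list[int]) -> list[float]:
    """Weight each example by expected / observed frequency of its (group, label) pair."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy data: group "a" receives the positive label far more often than group "b"
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)


def weighted_positive_rate(group: str) -> float:
    """Share of a group's total weight that sits on positive examples."""
    rows = [(w, y) for w, g, y in zip(weights, groups, labels) if g == group]
    return sum(w for w, y in rows if y == 1) / sum(w for w, _ in rows)


print(weighted_positive_rate("a"), weighted_positive_rate("b"))
```

In this toy data the unweighted positive rates are about 0.67 for group "a" and 0.25 for group "b"; after reweighing, both weighted rates come out to 0.5. Most training libraries accept per-example weights of this kind, typically through a sample-weight argument.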

In the end, there’s no substitute for continuous monitoring and regular audit testing on the AI output for bias and accuracy.

3. Monitoring and Auditability

AI governance must include comprehensive monitoring and evaluation frameworks that include performance metrics, bias testing, and security considerations. Established processes need to assess not just AI model capabilities, but also vulnerabilities, biases, potential adversarial exploits, and unintended behaviors.

Many of the same monitoring activities necessary for compliance are also accelerators for ongoing development.

- Vulnerability scanning and risk assessment: This should be done for all AI components before they are used in AI project development. One good way to accomplish this is with an AI project bill of materials. This doesn’t just document models; it also exposes and categorizes the risks your organization faces, including:

  - Technical risks, like memory requirements, compute constraints, and performance degradation at different quantization levels.

  - Legal risks, including license type, usage restrictions, and attribution requirements.

  - Operational risks, such as version stability and integration complexity.
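A bill of materials entry can be as simple as a structured record per model with a review function that sorts findings into the three risk categories above. The following is a minimal sketch; the `BOMEntry` fields, the license allow list, and the model names are hypothetical and do not reflect any particular product or standard BOM schema.

```python
from dataclasses import dataclass, field

# Hypothetical organizational policy, for illustration only
ALLOWED_LICENSES = {"Apache-2.0", "MIT", "BSD-3-Clause"}


@dataclass
class BOMEntry:
    name: str
    version: str
    license_id: str        # legal
    memory_gb: float       # technical
    version_pinned: bool   # operational
    usage_restrictions: list[str] = field(default_factory=list)  # legal


def review(entry: BOMEntry, memory_budget_gb: float) -> list[str]:
    """Return categorized findings for one bill-of-materials entry."""
    findings = []
    if entry.license_id not in ALLOWED_LICENSES:
        findings.append(f"legal: license '{entry.license_id}' is not on the allow list")
    for restriction in entry.usage_restrictions:
        findings.append(f"legal: restriction noted: {restriction}")
    if entry.memory_gb > memory_budget_gb:
        findings.append(f"technical: needs {entry.memory_gb} GB, budget is {memory_budget_gb} GB")
    if not entry.version_pinned:
        findings.append("operational: version is not pinned")
    return findings


entries = [
    BOMEntry("example-llm-7b", "1.2.0", "Apache-2.0", 16.0, True),
    BOMEntry("example-vision", "0.3.1", "Custom-NonCommercial", 48.0, False,
             ["no commercial use"]),
]

for entry in entries:
    print(entry.name, review(entry, memory_budget_gb=24))
```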

- Enterprise monitoring: Automated and telemetry-based analytics provide AI workflow tracking for compliance. Comprehensive logging captures all user actions and model interactions for audit purposes. System events that occurred, such as syncing jobs, and user actions that were taken, such as downloading a model, deploying a model, and logging in, should be automatically tracked. This monitoring also collects usage information and feedback for further improvements over time.

- Audit logs and compliance tracking: Enterprise security teams need comprehensive control and auditability across the entire AI asset lifecycle. Audit logging helps build complete audit trails for regular compliance reviews. Compliance officers can review audit logs of admin actions taken (policies created and updated, workflow permissions updated) while automated compliance checks validate deployments against enterprise policies.
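The logging described above usually comes down to writing one structured, timestamped record per user action or system event so that reviewers can filter them later. The sketch below writes JSON lines to an in-memory stream. The field names, action names, and the `record_event` helper are assumptions made for illustration, not the format of any specific platform.

```python
import json
from datetime import datetime, timezone
from io import StringIO


def record_event(stream, actor: str, action: str, target: str, **details) -> dict:
    """Append one audit event to the stream as a JSON line and return it."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "target": target,
        "details": details,
    }
    stream.write(json.dumps(event, sort_keys=True) + "\n")
    return event


def events_for(log_text: str, action: str) -> list[dict]:
    """Filter a JSON-lines audit log down to one action type."""
    events = (json.loads(line) for line in log_text.splitlines() if line.strip())
    return [e for e in events if e["action"] == action]


log = StringIO()  # stands in for an append-only log file or service
record_event(log, "alice", "login", "platform")
record_event(log, "alice", "model.download", "example-llm-7b", version="1.2.0")
record_event(log, "admin", "policy.update", "license-allow-list", added=["MIT"])

for event in events_for(log.getvalue(), "model.download"):
    print(event["actor"], event["target"], event["details"])
```

In production the stream would be an append-only store with restricted write access, since an audit trail that can be edited after the fact has little value in a compliance review.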

When monitoring identifies a problem, the entire AI governance team should be notified, and a person or team needs to be assigned to evaluate and remediate it as soon as possible.

4. Responsibility and Accountability

Depending on the size of your organization, an AI governance committee may also make sense. This could include representatives from IT, legal advisors, data scientists, subject matter experts, and others. Having these stakeholders meet regularly helps ensure alignment on regulatory requirements, organizational goals, and AI governance standards. This committee should also be responsible for developing policies for data handling, ethical requirements, deployment procedures, model lifecycles, and more.

The RACI project management system can be a sensible framework for clarifying roles and responsibilities in AI projects. RACI sets four levels of responsibility for team members in any type of project:

- Responsible: The person or team who implements the work and is directly responsible for the result.

- Accountable: The individual who approves projects, authorizes tasks, and has ultimate authority and accountability for the outcome.

- Consulted: Subject matter experts whose input shapes decisions. In AI projects, these often include legal advisors (on compliance), security teams (on vulnerabilities), and domain experts (on model appropriateness).

- Informed: Stakeholders who need to know about progress or decisions but don’t provide input.

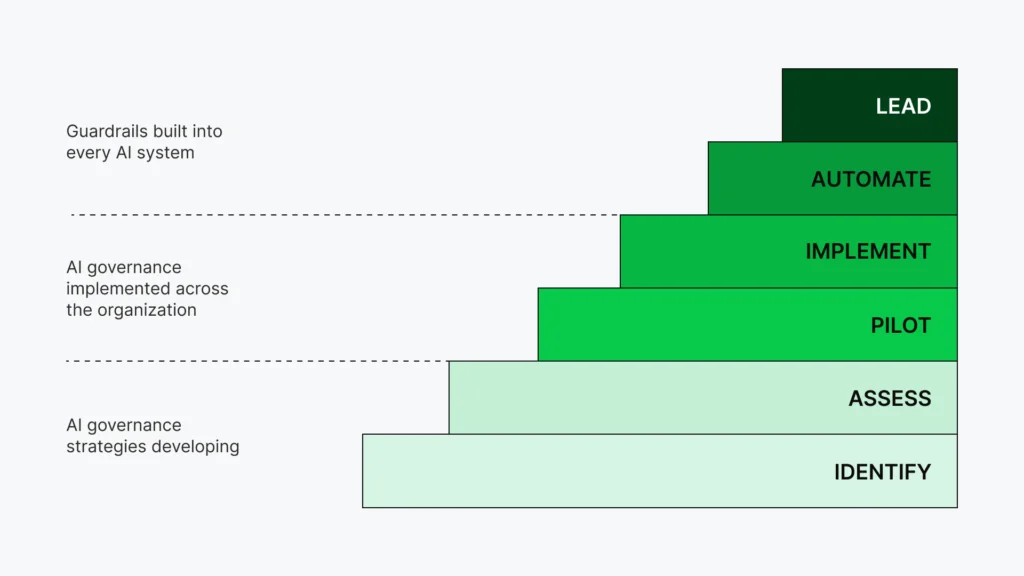
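A RACI assignment can also be kept as data and checked automatically. The sketch below assumes the common convention that each task has exactly one Accountable party and at least one Responsible party; the task names are made up, and the role names reuse the governance roles described earlier in this guide.

```python
RACI_LEVELS = {"R", "A", "C", "I"}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check each task for unknown levels, exactly one 'A', and at least one 'R'."""
    problems = []
    for task, assignments in matrix.items():
        levels = list(assignments.values())
        unknown = sorted(set(levels) - RACI_LEVELS)
        if unknown:
            problems.append(f"{task}: unknown level(s) {unknown}")
        accountable = levels.count("A")
        if accountable != 1:
            problems.append(f"{task}: expected exactly one Accountable, found {accountable}")
        if "R" not in levels:
            problems.append(f"{task}: no one is Responsible")
    return problems


# Hypothetical assignments: task -> {role: level}
raci = {
    "Approve model for production": {
        "Managers": "A", "Deployers": "R", "Overseers": "C", "Monitors": "I",
    },
    "Retrain on new data": {
        "Developers": "R", "Data Protectors": "C",
    },
}

for problem in validate_raci(raci):
    print(problem)
```

Here the second task is flagged because no one is Accountable for it, which is the kind of gap that is easy to miss in a spreadsheet and easy to catch in a check like this.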

AI Governance Maturity Levels

There are several models for AI maturity that can give you an idea of where your organization currently stands and where you need to go next. AI governance maturity should move along with your general AI maturity level.

- Identify: AI interest. Some use in corporate applications, such as meeting transcription in Zoom, Teams, or Google Meet. Individual experimentation without organizational approval. Awareness that AI governance is needed.

- Assess: AI experimentation, such as machine learning in a data science context or generative AI. Proof of concepts, data evaluation and cleanup. Begin assessment and plan AI governance strategy.

- Pilot: AI in production for a single purpose, such as a technical support chatbot. AI governance pilot policies in place.

- Implement: AI implemented for multiple purposes across the organization, with integrated monitoring. Unified governance strategies implemented across the organization.

- Automate: AI agents act to accomplish multiple tasks across the organization. AI governance includes built-in guardrails and human oversight.

- Lead: Primary organizational functions now accomplished with AI, or new business models launched that use AI. Governance guardrails built into every system with AI self-monitoring and self-optimizing, as well as human monitoring and periodic audits.

Organizations frequently cite security concerns, data privacy risks, lack of transparency, fears of bias and insufficient accountability as barriers to AI adoption. Open-source tools and components exemplify the problem: they’re foundational to modern AI development, but ungoverned open-source package adoption introduces significant risk.

Starting with a solid AI governance platform enables faster growth and facilitates stable and productive collaboration. You don’t need to choose between experimentation and responsible controls. Both are necessary, and having built-in oversight means experimentation can proceed without hesitation.

Anaconda AI Governance Platform Advantages

The Anaconda Platform provides comprehensive vulnerability scanning, curated packages, and automated security policies, ensuring your organization only uses secure and vetted packages. This helps prevent potential vulnerability exploitation and reduces the likelihood of data breaches. As a result, your organization experiences fewer security incidents and reduces the costs associated with breach remediation and compliance violations.

A comprehensive AI governance platform based on Anaconda can provide your organization with measurable returns, such as $840,000 in operational efficiency gains and 80% productivity improvements, according to the Forrester Total Economic Impact™ of Anaconda.

Trusted Distribution for AI

Building reliable machine learning and AI models requires access to verified, secure packages and libraries. Anaconda’s trusted distribution provides:

- Curated ML/AI Libraries: Pre-verified packages including scikit-learn, TensorFlow, PyTorch, and 8,000+ other data science libraries

- Secure Package Management: Access-vetted, vulnerability-scanned packages and trusted AI artifacts, enabling visibility to address vulnerabilities early while accelerating development

- Dependency Management: Eliminate version conflicts between ML/AI frameworks and 4,000+ other Python packages, complete with dependency management and security controls

- Performance Optimization: Libraries optimized for various hardware configurations, including AI accelerators

- Enterprise Support: Long-term support for multiple Python versions ensures model stability

Anaconda Solutions for Secure Governance

- Vulnerability Scanning: Anaconda Core automatically scans for Common Vulnerabilities and Exposures (CVEs) for all ML/AI dependencies

- Regulatory Compliance Support: Built-in tools for SOC 2, GDPR, and HIPAA requirements

- Automated Security Policy Enforcement: Define and enforce custom security policies tailored to your compliance standards

- Secure Deployment: Controlled environments for production AI model deployment

- Audit Trails: Complete visibility into model training data, algorithms, and decision processes

- License Filtering: Ensure adherence to open-source license compliance and filter out non-compliant licenses

- SBOM Generation: Package Security Manager lets you download .json-formatted Software Bill of Materials (SBOM) files for popular open-source packages

Governance and Security Integration for AI Workflows

AI models often process sensitive data and make critical business decisions, making security paramount. Deploy AI with confidence using a secure framework that protects models, data, and workflows while enabling regulatory compliance. Anaconda’s security for AI and AI governance capabilities ensure comprehensive protection while integrating with standard enterprise security protocols.

- Integrated Authentication and Access Control: Authenticate with Anaconda products directly from your organization’s internal identity platform (IDP), via OpenID or SAML. Both SSO and Directory Sync (SCIM) support automated Anaconda provisioning.

- Anaconda Core: Anaconda Core provides a secure, central repository where you can control your organization’s access to open-source software packages and track software vulnerabilities.

In its study, Forrester also found the Anaconda Platform delivered a “60% reduced risk of breaches from addressable attacks with security and governance controls.”

Key Differentiators and Benefits

- Accurate security data: Anaconda Core provides 7x more accurate open-source security data compared to typical third-party security applications

- Cost savings: On average, Anaconda saves companies the cost of five full-time developers

- Reduced risk: Fewer security incidents and compliance violations reduce the need for breach remediation

The Forrester study also found that “After the investment in Anaconda, the interviewees’ organizations experienced streamlined package security management, improved operational efficiency, and enhanced security. Key results from the investment included significant time savings for developers and data scientists, reduced risk of cybersecurity breaches, and overall technology cost savings.”

Getting Started

With a solid AI governance platform, your entire organization gains certainty that its AI is secure, compliant, and well-monitored from the start. A good AI governance platform gives your teams the safety guardrails they need to innovate at speed, scale innovation responsibly, and set your company apart.

Ready to accelerate your AI development with a solid AI governance platform?

Experience the Anaconda Platform and discover how trusted distribution, built-in security, and actionable insights can transform your approach to AI development and deployment.

Consult with an Anaconda expert: Anaconda architects have helped a wide variety of organizations from startups to Fortune 100 enterprises build their AI governance strategies. They’d be happy to help guide you.