One reason Python is great for data science and AI is that it can easily be extended with faster, compiled languages like C, C++, and Rust. Much of NumPy, for example, is written in C but exposes a Python interface, which makes it performant while remaining easy to use.

AI and machine learning workflows in particular depend on these binary libraries, but Python’s packaging ecosystem wasn’t originally designed to manage these compiled dependencies. Conda emerged from the scientific Python community specifically to solve this challenge.

If you’re doing web development with pure Python packages (like Django or requests), pip or uv may offer all the support you need. But for data science and AI work, where binary dependencies are unavoidable, conda’s architecture makes a critical difference. And when you’re deploying these workflows to production with security, compliance, and stability requirements, Anaconda provides the professional layer that enterprises need.

In this blog article, we’ll explain how conda solves the binary dependency challenges that can trip up pip- and uv-based workflows. Then, we’ll explore why these technical capabilities matter for enterprise deployment and how Anaconda’s professional management layer adds value on top of conda’s architectural foundation.

How Conda Solves Python’s Binary Dependency Challenge

Python’s strength in AI and data science comes from wrapping high-performance compiled libraries (written in C, C++, or Rust) in easy-to-use interfaces. NumPy needs BLAS, Pillow needs image codecs, and TensorFlow needs CUDA to run on GPUs. But Python’s package ecosystem wasn’t originally designed to manage these compiled dependencies.

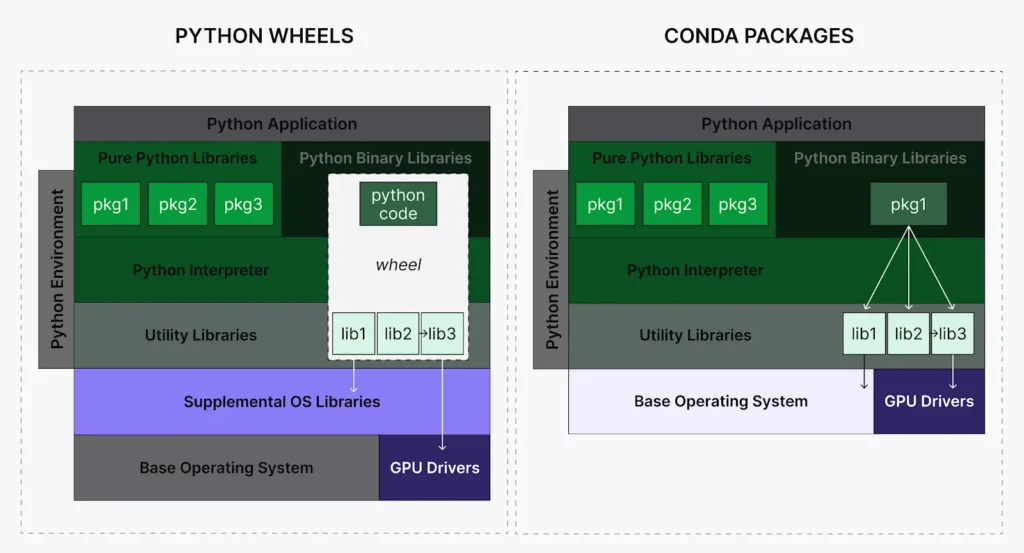

Pip and uv most frequently get packages (wheels) from PyPI. For pure Python packages, like Django, wheels work great. But for compiled packages, wheels can present two critical problems:

Challenge 1: Hidden security vulnerabilities. When Python wheels contain compiled components, these non-Python components don’t necessarily show up in package metadata (like requirements.txt files) and can be missed by software composition analysis (SCA) tools. There is an initiative to include software bills of materials (SBOMs) to address this; however, adoption is at the discretion of those maintaining the software.
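To make the "hidden component" problem concrete, here is a minimal sketch that lists files inside a wheel that look like compiled libraries. It relies only on the fact that a wheel is a zip archive. The helper names `is_native_library` and `bundled_libraries` are hypothetical, and the suffix list is an assumption rather than an exhaustive rule.

```python
import zipfile
from pathlib import PurePosixPath

# Assumption: these suffixes cover the common shared-library file types.
NATIVE_SUFFIXES = (".so", ".dll", ".dylib", ".pyd")


def is_native_library(member_name: str) -> bool:
    """Return True for names such as libwebp.so, libwebp.so.7.1.5, or foo.dll."""
    filename = PurePosixPath(member_name).name.lower()
    # Versioned Linux libraries carry a ".so." infix instead of a ".so" suffix.
    return filename.endswith(NATIVE_SUFFIXES) or ".so." in filename


def bundled_libraries(wheel) -> list[str]:
    """List archive members of a wheel (path or file object) that look compiled."""
    with zipfile.ZipFile(wheel) as archive:
        return sorted(name for name in archive.namelist() if is_native_library(name))
```

Running `bundled_libraries` on a downloaded wheel shows which binaries ship inside it, including any that never appear in the package's declared dependencies. It does not identify library versions or vulnerabilities; that still requires a scanner that understands the binaries themselves.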

Challenge 2: Runtime failures due to binary incompatibilities. Pip’s resolver primarily focuses on Python-level dependencies. It can neither validate binary compatibility nor install non-Python packages, so pip install can succeed even when packages contain incompatible libraries or are missing needed dependencies. These conflicts only surface at runtime as segfaults or mysterious crashes.

Conda packages built by Anaconda are designed to address both problems by treating binary libraries as explicit dependencies. Every component becomes visible to security scanners and any required updates are surgical, like conda update libwebp. The solver helps identify Application Binary Interface (ABI) incompatibilities before installation, so incompatibilities are caught upfront, not at 3AM in production. For more on how conda’s architecture differs fundamentally from pip, see Conda ≠ PyPI: Why Conda Is More Than a Package Manager.
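Because binary libraries are declared as ordinary dependencies, you can ask an environment which packages rely on a given library. The sketch below assumes the environment's `conda-meta` directory holds one JSON record per installed package with `name` and `depends` fields, which is how current conda versions lay it out. The function name `dependents_of` is hypothetical.

```python
import json
from pathlib import Path


def dependents_of(env_prefix, library: str) -> list[str]:
    """List installed conda packages that declare `library` as a dependency."""
    dependents = []
    for record_path in Path(env_prefix, "conda-meta").glob("*.json"):
        record = json.loads(record_path.read_text(encoding="utf-8"))
        # Each "depends" entry is a match spec such as "libwebp >=1.3.2,<2.0a0";
        # the first whitespace-separated token is the package name.
        dep_names = {spec.split()[0] for spec in record.get("depends", []) if spec.strip()}
        if library in dep_names:
            dependents.append(record["name"])
    return sorted(dependents)
```

For example, `dependents_of(sys.prefix, "libwebp")` inside an active conda environment would return the packages that share that one library, which is why a single library update can cover all of them.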

Why This Architecture Matters for Enterprise Python

Conda’s architectural advantages matter most for data science, AI, and scientific computing because these workflows depend heavily on compiled libraries, where pip’s and uv’s limitations often create the biggest problems. Additionally, these compiled libraries carry more risk than pure Python packages. Anaconda transforms this foundation into enterprise capabilities that reduce risk while accelerating delivery.

In the following sections, we’ll dive into four foundational capabilities of Anaconda Core:

- Coordinated distribution for environments that are designed to work reliably from development to production

- Vulnerability management that works at enterprise scale

- Supply chain protection with professional oversight

- Governance controls that enable automated compliance

Coordinated Distribution for Reliable Environments

The package coordination problem: Community repositories often treat packages as independent units. When one maintainer updates OpenSSL, another updates NumPy, and a third updates SciPy—all without coordination—your environment could break in production.

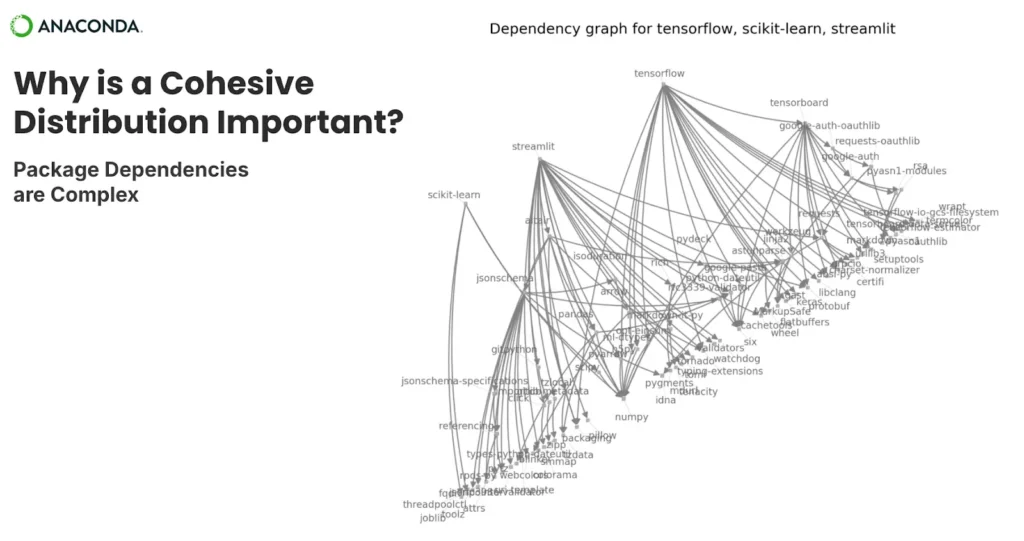

Modern data science and AI workflows depend on intricate webs of interconnected packages. TensorFlow, scikit-learn, and Streamlit each pull in dozens of dependencies. When maintainers update these packages independently, the complexity becomes challenging to manage.

Distribution as a product: We manage packages as a unified product, not independent components. When we update fundamental libraries, we rebuild and test dependent packages together. ABI compatibility management prevents binary interface conflicts. Conda’s built-in dependency resolution prevents conflicts before installation. Platform-native builds on Windows, Linux, and macOS mean packages actually work on the platforms where you deploy them.

For even broader ecosystem access, the Anaconda Community Channel provides more than 17,000 additional packages selected from conda-forge based on compatibility with Anaconda Distribution. These curated packages work alongside the defaults channel and are all pure Python, so you still get the security benefits of relying on Anaconda’s compiled foundation.

The result: You can update confidently because packages are tested together. Cross-platform deployments work seamlessly, and the Community Channel provides vetted access to thousands more packages on top of Anaconda’s secure foundation—all tested for compatibility.

Vulnerability Management

In September 2023, CVE-2023-4863 exposed a critical vulnerability in libwebp. For conda users, the response was straightforward: Anaconda published an expedited update to the libwebp package. Every project depending on it (like Pillow, opencv-python, imageio, rasterio) continued working because they shared the same, now-patched library.

For PyPI users, the same vulnerability instead meant waiting for individual package maintainers to rebuild wheels for every affected package, and then updating each of those packages individually. Though the community responded swiftly, an estimated 50 projects were affected in total. More than 16,000 wheels bundle libwebp on PyPI today, and this pattern repeats across the ecosystem: more than 70,000 wheels bundle OpenSSL, and more than 530,000 wheels bundle libraries in a .libs folder.1

Anaconda’s approach: We manually validate Critical and High CVEs to reduce false positives that waste security teams’ time. Our vulnerability reports account for how we actually built each package. When we separate dependencies or use dynamic linking, our data reflects those decisions. We actively monitor CVE disclosures and prioritize updates across the entire distribution.

The result: You’re automatically notified when CVEs emerge, so you and your team can quickly respond to potential risks. When a fix is available, you update the affected library once—not hundreds of times across dozens of packages.

Supply Chain Protection

While PyPI has taken steps to improve security, including mandatory two-factor authentication for maintainers, PyPI’s decentralized publishing model presents different security considerations than centralized build systems. Anyone with an authenticated account can publish packages built anywhere. Recent attacks include typosquatting campaigns where attackers exploit simple typos in package names—attackers publish malicious packages named “reqeusts” instead of “requests,” tricking users into installing compromised code.
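One simple defense against typosquatting is to compare a requested package name against a list of names you already trust. The sketch below uses the standard library's `difflib.get_close_matches`. The function name `possible_typosquat`, the trusted list, and the 0.8 similarity cutoff are all illustrative assumptions, not a vetted detection rule.

```python
import difflib


def possible_typosquat(name, trusted_names, cutoff=0.8):
    """Return the trusted name that `name` closely resembles, or None.

    An exact match is treated as safe. A near miss is reported so that a
    human can decide whether it is a typo or a different, legitimate package.
    """
    candidate = name.lower()
    if candidate in trusted_names:
        return None
    matches = difflib.get_close_matches(candidate, trusted_names, n=1, cutoff=cutoff)
    return matches[0] if matches else None


# Hypothetical allowlist for illustration only.
TRUSTED = ["requests", "numpy", "pandas"]
```

With this list, `possible_typosquat("reqeusts", TRUSTED)` reports `"requests"`, while an unrelated name such as `"flask"` returns `None`. A check like this only catches names close to ones you list, so it complements a curated repository rather than replacing one.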

Beyond typosquatting, the build process itself presents an additional surface area for attacks. Attackers can inject malicious code after source control through compromised build processes and platforms (like the Codecov breach where attackers modified build scripts to steal credentials). PyPI’s decentralized model means each package’s security depends entirely on individual project practices—compromising a single maintainer’s machine can compromise their packages.

Anaconda’s difference: Vetted employees build packages in controlled environments with role-based access controls. Source integrity verification through cryptographic hash checking means builds fail if code has been tampered with. Separated build and release environments prevent credential compromise. All packages undergo virus scanning, and cryptographic signatures protect against tampering.
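The hash-checking idea can be sketched in a few lines: compute a SHA-256 digest of the downloaded source and refuse to continue if it differs from the pinned value. This is a generic illustration using Python's `hashlib`, not a description of Anaconda's internal build tooling. The function name `verify_source` is hypothetical.

```python
import hashlib


def verify_source(data: bytes, expected_sha256: str) -> None:
    """Raise ValueError if `data` does not match the pinned SHA-256 digest."""
    actual = hashlib.sha256(data).hexdigest()
    # Hex digests are compared case-insensitively.
    if actual != expected_sha256.lower():
        raise ValueError(
            f"source hash mismatch: expected {expected_sha256}, got {actual}"
        )
```

A build script would call `verify_source` on the downloaded archive's bytes before unpacking it, so a modified archive stops the build instead of flowing into the package.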

The result: A curated, secure repository for open source software. Rather than thousands of independent contributors, you get one professional team following consistent security protocols. Professional oversight catches problems before they are released. When issues do arise, formal incident response ensures a rapid resolution.

Governance Controls

Hidden software components make compliance automation nearly impossible. When more than 530,000 PyPI wheels bundle libraries that scanners may not detect, your compliance tools could produce incomplete results. License information is sometimes missing or inconsistently formatted, making it impossible to automatically enforce policies.

With Anaconda, governance is embedded automatically, helping teams move faster.

Anaconda makes every component visible: SBOMs come standard across our distribution, not as an emerging option you hope maintainers adopt, but as a built-in capability. Licenses are recorded as standardized Software Package Data Exchange (SPDX) expressions in a machine-readable format, so your tools can automatically scan for license compliance across thousands of packages. They can detect licenses, such as the GNU General Public License (GPL), that may not be compatible with your organization’s policies before they reach production. You can identify potentially incompatible license combinations across your dependency tree and enforce organization-wide policies without manual review of every package.
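Machine-readable license expressions are what make this kind of policy check scriptable. The sketch below is a simplified illustration that pulls identifiers out of an SPDX expression and flags any that start with a denied prefix. It is not a full SPDX parser, and the function names and the deny list are hypothetical.

```python
import re

# SPDX expression operators; everything else is treated as an identifier.
OPERATORS = {"AND", "OR", "WITH"}


def license_ids(expression: str) -> set[str]:
    """Extract identifiers from an SPDX expression, ignoring operators and parentheses.

    Simplification: an exception name following WITH is returned as if it
    were a license identifier.
    """
    tokens = re.findall(r"[A-Za-z0-9.+-]+", expression)
    return {token for token in tokens if token.upper() not in OPERATORS}


def denied_licenses(expression: str, denied_prefixes=("GPL-", "AGPL-")) -> set[str]:
    """Return identifiers in `expression` that match an example deny list."""
    return {lid for lid in license_ids(expression) if lid.startswith(denied_prefixes)}
```

This check is deliberately conservative: `denied_licenses("MIT OR GPL-3.0-only")` flags `GPL-3.0-only` even though the OR would let you choose MIT, so flagged packages still need a policy decision. An identifier such as `LGPL-2.1-or-later` is not flagged by the example prefixes.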

The result: Stronger security with less effort. You pass security audits faster and spend less time manually defining and enforcing policies. Rather than slowing you down, governance is embedded automatically, so you can drive results faster.

Conclusion

Conda’s architecture solves Python’s binary dependency challenge: Treating libraries as explicit dependencies makes vulnerabilities visible and enables surgical updates.

Anaconda adds professional management on top. Environments work reliably from development to production. Vulnerabilities are actively monitored. Packages are vetted and validated for supply chain protection. Governance is embedded automatically with SBOMs, standardized licenses, and organization-wide policies.

The right tool depends on your reality. Pip and uv work well for pure Python applications. Conda’s architecture becomes essential when binary dependencies enter the picture. Anaconda adds the professional oversight that production environments demand.

Communities excel at innovation. Enterprises need reliability. Conda provides the architecture. Anaconda provides the professional management layer.

1 By analyzing data from PyPI (as of September 2025), we can quantify how broadly libraries like libwebp and OpenSSL are bundled within wheels. 16,424 wheels across 180 projects have bundled the libwebp binary; 70,872 wheels across 802 projects have bundled the OpenSSL binary; 532,105 wheels across 5,332 projects have bundled libraries in a folder called .libs, which is the most common method for bundling these libraries.