As data scientists, ML engineers, and developers, we’ve all faced the frustration of environment setup. The hours spent configuring dependencies, resolving conflicts, and ensuring everything works correctly are hours not spent on actual analysis and model development. Anaconda’s expanded quick start environments aim to eliminate this pain point entirely.

What Are Quick Start Environments?

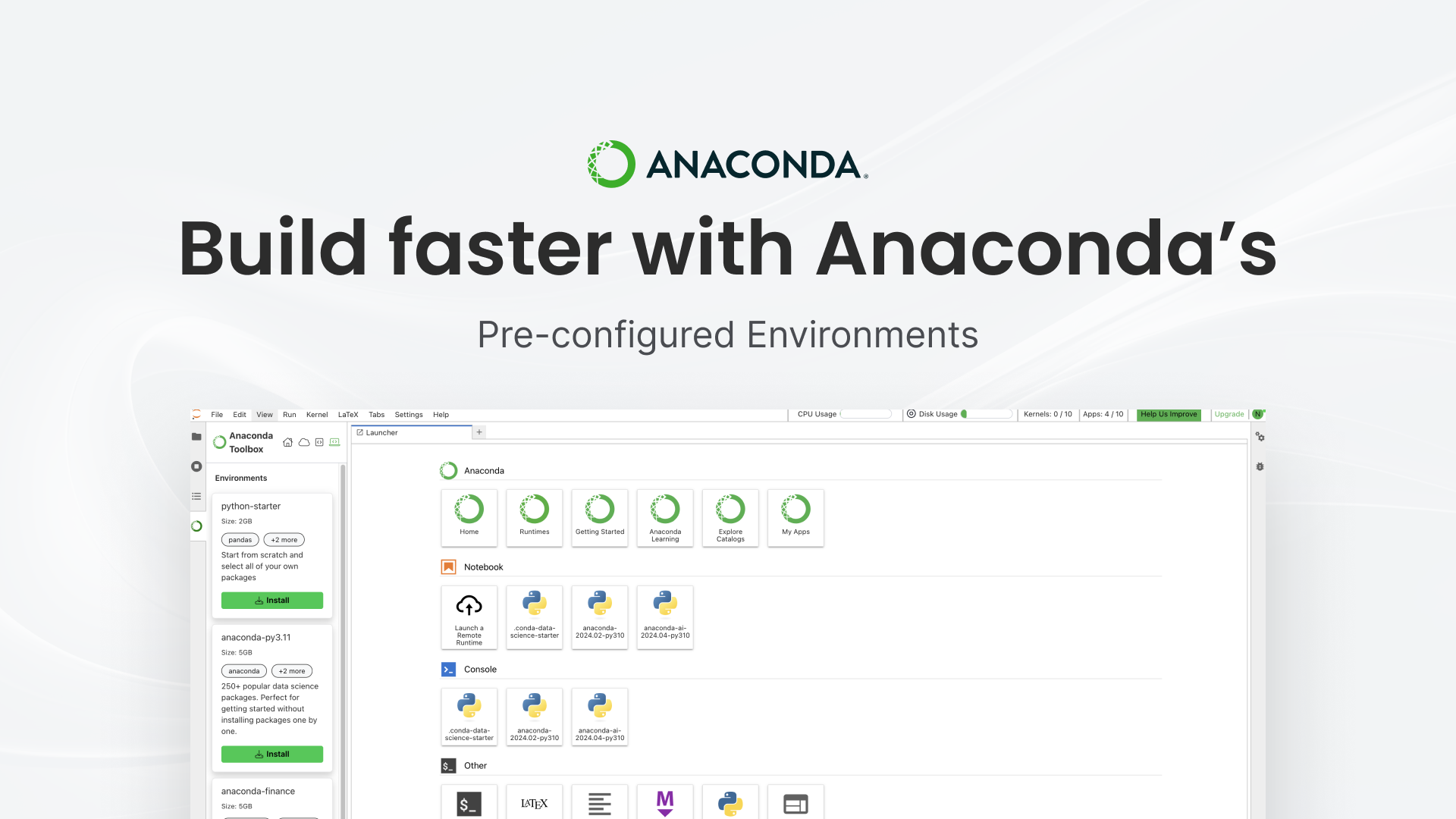

Quick start environments are pre-configured conda environments that bundle commonly used packages for specific use cases. You can use them in Jupyter Notebooks on the Anaconda AI Platform or in any IDE that supports conda environments. Each environment contains a set of tested, compatible packages designed to reduce configuration complexity and give you a working setup for common workflows.

Think of them as pre-assembled toolkits: ready to use the moment you need them, with components already tested for compatibility. Read on to learn more about our expanded collection of quick start environments.

How to Access Quick Start Environments

Accessing Quick Start Environments is straightforward within the Anaconda AI Platform:

- Install Anaconda Toolbox from Navigator (or with conda install anaconda-toolbox), or update to v4.20+ (conda update anaconda-toolbox) if you already have it installed

- Log in to the Anaconda AI Platform

- Open JupyterLab or Jupyter Notebook

- Open the Anaconda Toolbox and select the “Create New Environment” option.

- Launch your chosen environment with a single click

The entire process takes minutes rather than the hours often required to configure environments manually. Quick start environments are available to Anaconda AI Platform users on every tier, although several of the specialized environments below require the Business tier. Once you download a quick start environment, you can use it in JupyterLab, Jupyter Notebook, or any IDE that supports conda environments, such as VS Code or PyCharm.
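Because the same environment can be opened from JupyterLab, VS Code, or PyCharm, it is worth confirming that your editor is actually running the interpreter you expect and that the packages you need are present. The following is a minimal, standard-library-only sketch; the helper names `describe_active_environment` and `check_packages` are hypothetical and not part of Anaconda Toolbox. It assumes that a standard conda environment's root contains a `conda-meta` directory, and it treats `CONDA_DEFAULT_ENV` only as a hint, since that variable is set by `conda activate` and may be absent when an IDE launches the interpreter directly.

```python
import importlib.util
import os
import sys
from importlib import metadata
from pathlib import Path


def describe_active_environment():
    """Summarize which Python environment the running interpreter belongs to."""
    prefix = Path(sys.prefix)
    return {
        # Set by `conda activate`; may be None if an IDE started the
        # interpreter without activating the environment first.
        "conda_env_name": os.environ.get("CONDA_DEFAULT_ENV"),
        # Root directory of the environment this interpreter runs from.
        "prefix": str(prefix),
        # Standard conda environments keep package records in conda-meta/.
        "looks_like_conda_env": (prefix / "conda-meta").is_dir(),
        "python_version": sys.version.split()[0],
    }


def check_packages(import_names):
    """Map each import name to its installed version, "unknown", or None.

    None: the module cannot be found in this environment.
    "unknown": the module imports, but no installed distribution has that
    exact name (e.g. the scikit-learn distribution is imported as sklearn).
    """
    report = {}
    for name in import_names:
        if importlib.util.find_spec(name) is None:
            report[name] = None
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "unknown"
    return report


if __name__ == "__main__":
    for key, value in describe_active_environment().items():
        print(f"{key}: {value}")
    # A few libraries listed for the Anaconda Distribution environment;
    # substitute the packages your chosen environment should provide.
    for name, version in check_packages(
        ["numpy", "pandas", "scipy", "matplotlib", "plotly"]
    ).items():
        print(f"{name:<12} {version or 'not installed'}")
```

Pass the name you would use in an import statement, not the package name you would install, since the two do not always match.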

Expanded Quick Start Environments Collection

We’ve significantly expanded our quick start environments to cover a broader range of use cases and industries. Here’s our complete collection:

Core Development Environments

1. Python Starter Environment (Available for Free, Starter, and Business tiers)

This environment provides essential Python tools and libraries for general development work. It suits beginners and anyone who wants a clean, lightweight environment they can customize with full control over the packages they add.

What’s included: Core Python packages, basic data handling libraries, and fundamental development tools with minimal dependencies.

Use case example: Sarah, a data analyst transitioning from Excel to Python, uses the Python Starter environment to learn programming fundamentals without being overwhelmed by specialized packages. She can focus on mastering Python basics while having just enough tools for data manipulation and visualization, creating a smooth learning curve that builds her confidence.

2. Anaconda Distribution Environment (Available for Free, Starter, and Business tiers)

A comprehensive environment containing the full suite of Anaconda’s curated data science and machine learning libraries, equivalent to the classic Anaconda Distribution. It is the Swiss Army knife of environments, suitable for everything from data visualization to complex machine learning tasks.

What’s included: NumPy, pandas, SciPy, Matplotlib, Plotly, and hundreds of other data science packages, all pre-configured to work seamlessly together.

Use case example: Miguel, a seasoned data scientist working across multiple projects, relies on the Anaconda Distribution environment as his go-to workspace. When asked to quickly analyze a customer churn dataset, he doesn’t waste time installing packages—he simply launches this environment and immediately begins exploring data patterns, fitting models, and generating visualizations for stakeholders, all within minutes of receiving the data.
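Miguel’s first pass over a churn dataset might look something like the pandas sketch below, which uses only libraries included in the Anaconda Distribution environment. The column names and records are hypothetical stand-ins for a real dataset, which would typically be loaded with `pd.read_csv`.

```python
import pandas as pd

# Hypothetical customer records; a real analysis would load the dataset,
# e.g. with pd.read_csv, instead of building it inline.
customers = pd.DataFrame(
    {
        "plan": ["basic", "basic", "basic", "premium", "premium",
                 "trial", "trial", "trial"],
        "monthly_spend": [20, 25, 22, 80, 95, 0, 0, 5],
        "churned": [1, 0, 1, 0, 0, 1, 1, 1],  # 1 = customer left
    }
)

# Per-plan customer count, churn rate, and average spend, worst churn first.
summary = (
    customers.groupby("plan")
    .agg(
        n_customers=("churned", "size"),
        churn_rate=("churned", "mean"),
        avg_spend=("monthly_spend", "mean"),
    )
    .sort_values("churn_rate", ascending=False)
)
print(summary)
```

Named aggregation (the `agg(new_column=(source_column, function))` form) keeps the summary readable; from there, a call such as `summary["churn_rate"].plot.bar()` produces a quick Matplotlib chart for stakeholders.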

AI & Machine Learning Environments

3. AI/ML Starter Environment (Available for Free, Starter, and Business tiers)

A focused collection of essential machine learning and AI development tools, optimized for performance and compatibility.

What’s included: TensorFlow and PyTorch, along with fastai, supporting visualization tools, preprocessing libraries, and model evaluation frameworks.

Use case example: Kelly, an ML engineer, receives a request to build a prototype image classification model by the end of the day. Rather than spending valuable time configuring deep learning frameworks, she launches the AI/ML Starter environment and immediately begins adapting a convolutional neural network to her specific dataset.

4. PyTorch Environment (Available for Business tier)

A specialized environment for deep learning development using the PyTorch framework. This environment provides the complete PyTorch ecosystem with pre-configured GPU support and popular deep learning libraries.

What’s included: PyTorch, torchvision, torchaudio, transformers, tokenizers, datasets, and supporting libraries for deep learning development.

Use case example: Marcus, a computer vision researcher at a robotics company, needs to develop object detection models for autonomous navigation systems. Using the PyTorch environment, he can immediately access pre-trained models through torchvision, fine-tune them on his custom dataset, and experiment with different architectures. The pre-configured environment eliminates the notorious PyTorch installation challenges, allowing him to focus on model performance rather than environment troubleshooting.

5. Natural Language Processing Environment (Available for Business tier)

Comprehensive toolkit for text analysis, language processing, and large language model integration. This environment brings together powerful NLP libraries with modern transformer models and LLM frameworks.

What’s included: NLTK, spaCy, scikit-learn, wordcloud, transformers, torch, TensorFlow, and LangChain for advanced NLP workflows.

Use case example: Alex, a content strategist at a media company, needs to analyze thousands of social media posts to understand public sentiment about recent product launches. Using the NLP environment, he can immediately start processing text data with spaCy for entity recognition, apply sentiment analysis using pre-trained transformers models, and create compelling word clouds for executive presentations. The integrated LangChain tools also allow him to experiment with LLM-powered content summarization, turning hours of manual analysis into minutes of automated insights.

Industry-Specific Environments

6. Finance Environment (Available for Free, Starter, and Business tiers)

A specialized environment configured for financial analysis, modeling, and visualization with libraries selected for financial applications.

What’s included: statsmodels, scikit-learn, quantstats, and other specialized packages for time series analysis, risk modeling, portfolio optimization, and financial visualization, alongside core data science tools.

Use case example: Mike, a quantitative analyst at an investment firm, uses the Finance environment to rapidly prototype trading strategies. Instead of spending hours configuring specific financial packages, he can immediately leverage specialized tools for market data analysis and portfolio optimization.
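Two of the most common risk figures in this kind of analysis are periodic returns and maximum drawdown. Libraries in the Finance environment such as quantstats report metrics like these directly; the sketch below instead computes them by hand with pandas so each step is visible. The price series is hypothetical.

```python
import pandas as pd

# Hypothetical daily closing prices for a single asset.
prices = pd.Series(
    [100.0, 102.0, 101.0, 105.0, 98.0, 99.0, 107.0],
    index=pd.date_range("2024-01-01", periods=7, freq="D"),
    name="close",
)

# Simple daily returns, r_t = P_t / P_(t-1) - 1; the first value is NaN.
returns = prices.pct_change()

# Drawdown is the fractional drop from the highest price seen so far;
# its most negative value is the maximum drawdown.
running_peak = prices.cummax()
drawdown = prices / running_peak - 1
max_drawdown = drawdown.min()

print(f"Cumulative return: {prices.iloc[-1] / prices.iloc[0] - 1:.2%}")
print(f"Maximum drawdown:  {max_drawdown:.2%}")
```

For this series the maximum drawdown is the fall from the peak of 105 to 98, about -6.67%, even though the asset ends 7% higher than it started.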

7. Banking Environment (Available for Business tier)

A specialized environment for banking applications including credit risk modeling, regulatory compliance, and fraud detection use cases. This environment provides machine learning and statistical tools commonly used in banking.

What’s included: XGBoost, LightGBM, imbalanced-learn, and specialized packages for financial modeling and risk assessment.

Use case example: Jennifer, a senior risk analyst at a regional bank, needs to update the institution’s credit scoring model to comply with new regulatory requirements. Using the Banking environment, she can immediately access XGBoost for building robust gradient boosting models, apply imbalanced-learn techniques to handle the skewed nature of default data, and use specialized statistical packages for stress testing scenarios.
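The “skewed nature of default data” is easy to make concrete: when only a few percent of loans default, a model can score high accuracy by predicting “repaid” for everyone. One common counter-measure is to reweight the classes. The sketch below is a standard-library illustration of the “balanced” heuristic that scikit-learn documents for `class_weight="balanced"`; imbalanced-learn offers resampling alternatives, and for binary problems XGBoost exposes a related `scale_pos_weight` parameter. The function name and loan data are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes (such as defaults) receive proportionally larger weights,
    so misclassifying them costs as much in total as the common class.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical loan outcomes: 0 = repaid, 1 = defaulted (5% default rate).
loan_outcomes = [0] * 95 + [1] * 5
weights = balanced_class_weights(loan_outcomes)
print(weights)
```

With these weights each class contributes the same total weight (95 × 0.526... ≈ 50 and 5 × 10 = 50), which is exactly the balance the heuristic is designed to produce.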

8. Insurance Environment (Available for Business tier)

Designed for actuarial modeling, claims analytics, and catastrophe risk assessment use cases. This environment includes probabilistic and statistical tools commonly used in modern insurance analytics.

What’s included: PyMC, ArviZ, patsy, and specialized packages for actuarial science and risk modeling.

Use case example: Robert, a senior actuary at a property insurance company, needs to model hurricane risk exposure for the upcoming season. Using the Insurance environment, he leverages PyMC for Bayesian modeling of catastrophe frequency and severity, uses ArviZ for model diagnostics and visualization, and applies specialized statistical packages for claims triangulation. The environment’s pre-configured probabilistic modeling tools allow him to build sophisticated risk models that would typically require weeks of package installation and compatibility testing.
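Claims triangulation organizes claims by accident year and development period into a run-off triangle, and the best-known way to project such a triangle forward is the chain-ladder method. The plain-Python sketch below implements basic volume-weighted chain-ladder to show the arithmetic; it is an illustration of the technique rather than the API of any package in the Insurance environment, and the claim figures and function names are hypothetical. Production reserving also needs tail factors and uncertainty estimates, which is where probabilistic tools such as PyMC can help.

```python
def chain_ladder_factors(triangle):
    """Volume-weighted age-to-age development factors for a cumulative triangle.

    triangle[i][j] is cumulative paid claims for accident year i at
    development period j; later accident years have fewer observed periods.
    """
    n_periods = max(len(row) for row in triangle)
    factors = []
    for j in range(n_periods - 1):
        # Only accident years observed at both period j and j + 1 contribute.
        rows = [row for row in triangle if len(row) > j + 1]
        factors.append(sum(r[j + 1] for r in rows) / sum(r[j] for r in rows))
    return factors


def project_ultimates(triangle, factors):
    """Roll each accident year's latest value forward with the remaining factors."""
    ultimates = []
    for row in triangle:
        value = row[-1]
        for f in factors[len(row) - 1:]:
            value *= f
        ultimates.append(value)
    return ultimates


# Hypothetical cumulative paid claims (in thousands) for three accident years.
paid = [
    [100.0, 150.0, 165.0],
    [110.0, 170.0],
    [120.0],
]
factors = chain_ladder_factors(paid)
print(factors)
print(project_ultimates(paid, factors))
```

Here the first factor is (150 + 170) / (100 + 110) ≈ 1.524 and the second is 165 / 150 = 1.1, so the youngest accident year’s 120 projects to roughly 201 at ultimate.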

9. Life Sciences Environment (Available for Business tier)

A toolkit for genomics, bioinformatics, and molecular analysis curated for pharmaceutical and life sciences research applications. This environment provides the specialized tools commonly used for modern computational biology workflows.

What’s included: Biopython, SciPy, scikit-learn, NetworkX, and specialized packages for genomics and molecular analysis.

Use case example: Dr. James Park, a computational biologist at a pharmaceutical company, is tasked with identifying potential drug targets from genomic data. Using the Life Sciences environment, he can immediately access Biopython for sequence analysis, apply NetworkX for protein-protein interaction network analysis, and use specialized packages for molecular modeling. When his team discovers a promising compound, he can quickly transition to analyzing its molecular properties and potential interactions, accelerating the drug discovery pipeline from months to weeks.

10. Manufacturing Environment (Available for Business tier)

Industrial IoT, predictive maintenance, and quality control analytics curated for smart manufacturing and process optimization systems. This environment provides tools used in modern industrial data science and automation.

What’s included: FastAPI, Celery, APScheduler, Prophet, and packages for industrial data processing and predictive maintenance.

Use case example: Carlos, a process engineer at an automotive manufacturing plant, needs to implement predictive maintenance for critical assembly line equipment. Using the Manufacturing environment, he can immediately set up real-time data collection using FastAPI to interface with industrial sensors, apply Prophet for time series forecasting of equipment degradation, and use Celery for distributed processing of maintenance alerts. The pre-configured industrial IoT packages allow him to deploy a sophisticated maintenance system that prevents costly production downtime, transforming reactive maintenance into proactive optimization.

Technology Integration Environments

11. Snowflake Environment (Available for Business tier)

Native Snowflake connectivity with data processing, analytics, and machine learning for cloud data platforms. This environment provides integration with Snowflake’s data cloud ecosystem.

What’s included: Snowflake Snowpark Python, Snowflake Connector, Snowflake ML Python, Streamlit, and data processing packages optimized for Snowflake workflows.

Use case example: Patricia, a data engineer at a retail analytics company, needs to build ML models that run directly within Snowflake’s cloud data platform. Using the Snowflake environment, she can immediately access Snowpark Python to process terabytes of customer transaction data without moving it out of Snowflake, apply Snowflake ML Python for building models that scale with their data warehouse, and create interactive Streamlit dashboards for business stakeholders.

12. ETL Environment (Available for Business tier)

Extract, transform, and load data pipelines with modern data processing frameworks and workflow orchestration tools. This environment provides essential tools for building robust, scalable data pipelines.

What’s included: PySpark, Dask, fsspec, MLflow, and comprehensive data pipeline tools.

Use case example: Rachel, a senior data engineer at a financial services firm, needs to process daily transaction data from multiple sources and load it into their data lake for analysis. Using the ETL environment, she can immediately leverage PySpark for distributed processing of large transaction files and use MLflow for tracking data pipeline performance. The pre-configured distributed computing tools allow her to build robust, scalable data pipelines that handle peak transaction volumes without the months typically required for big data infrastructure setup.

13. Web Development Environment (Available for Business tier)

Modern Python web development with FastAPI, Django, Flask, and database integration tools. This environment provides tools for building full-stack data-driven applications.

What’s included: Flask, FastAPI, Django, SQLAlchemy, and modern web development tools.

Use case example: Tom, a full-stack developer at a fintech startup, needs to build a data-driven API that serves real-time financial analytics to their mobile app. Using the Web Development environment, he can immediately start building high-performance APIs with FastAPI, integrate with their PostgreSQL database using SQLAlchemy, and deploy secure endpoints that handle thousands of concurrent requests. The pre-configured web framework eliminates the typical dependency conflicts between different Python web libraries, allowing him to focus on business logic rather than infrastructure compatibility.

14. DevOps Environment (Available for Business tier)

Infrastructure automation and deployment tools for modern DevOps workflows and cloud operations. This environment provides tools for automating software delivery pipelines.

What’s included: Boto3, the Docker SDK for Python, Python clients for Kubernetes and Jenkins, and other infrastructure automation tools.

Use case example: Kevin, a DevOps engineer at a cloud-native company, needs to automate the deployment of machine learning models across multiple Kubernetes clusters. Using the DevOps environment, he can immediately script AWS infrastructure provisioning with Boto3, manage Kubernetes deployments using the Python client, and orchestrate CI/CD pipelines through Jenkins APIs. The integrated automation tools allow him to build sophisticated deployment pipelines that scale ML models from development to production without the weeks typically required for DevOps toolchain integration.

Benefits for Individual Practitioners

The advantages for individual data scientists and developers include:

- Productivity Boost: Start working on actual problems faster, rather than fighting with environment setup

- Reduced Frustration: Reduce common dependency conflicts and version compatibility issues

- Best Practices Built-In: Benefit from pre-selected packages and configurations

- Focus on Core Work: Spend more time on analysis and model development, not infrastructure

- Industry Specialization: Access domain-specific tools selected for each field

Benefits to an Organization

Beyond individual productivity gains, organizations realize several strategic advantages:

- Standardization: Enable all team members to work in consistent environments

- Onboarding Efficiency: New team members can get started quickly

- Reduced Support Overhead: Fewer environment-related support tickets for IT teams

- Foster Collaboration: Simplified workstreams when environments are consistent across teams

- Industry Considerations: Pre-configured environments built with industry-specific needs in mind

Part of the Anaconda AI Platform

Ready to reduce environment headaches and focus on your actual data science work? Our expanded collection of 14 Quick Start Environments is just the beginning. We will continue to gather user feedback and monitor industry trends to identify new specialized environments that would benefit our community, and each environment is regularly updated to include the latest stable versions of key packages and emerging tools. Create an account or log in to the Anaconda AI Platform and start using our new Quick Start Environments today!

Quick Start Environments are just one capability of the Anaconda AI Platform, which combines trusted distribution, simplified workflows, real-time insights, and governance controls that accelerate AI development with open source. Want to see a full demo of the platform or upgrade your account to gain access to all our Quick Start Environments? Reach out to our sales team.