AI is moving fast, but enterprise adoption often gets stuck in the same old bottlenecks: dependency hell, ungoverned open-source packages, and compliance risks that slow down innovation. For many organizations, deploying secure, reproducible, and scalable AI models in production is still a major challenge.

With billions invested in AI platforms, organizations can’t afford to let governance and reproducibility issues stall progress. That’s why the recent native integration between Anaconda and Databricks is such a game-changer: it brings the best of open-source Python and enterprise-grade security into one seamless experience.

In a pivotal move for enterprise AI development, Anaconda, the leader in advancing AI with open source for its 50+ million users, is proud to announce a strategic partnership and native integration with Databricks, the data and AI company known for its industry-leading data intelligence platform.

This collaboration merges Anaconda’s secure, curated open-source Python packages with the scale, performance, and governance of Databricks’ unified analytics and machine learning platform. It represents a new standard for enterprise-ready AI development—reducing friction, accelerating time-to-value, and ensuring compliance at scale.

This partnership marks the first time that Anaconda’s enterprise-grade Python ecosystem is available natively within Databricks Runtime, making it easier than ever for joint customers to develop, deploy, and scale AI/ML solutions with confidence.

Over the past decade, open-source Python libraries have become the de facto standard for data science, machine learning, and now generative AI. Yet, even as they’ve enabled massive experimentation and innovation, they’ve also created new risks around dependency management, vulnerability exposure, and inconsistent reproducibility between development and production environments.

In parallel, Databricks has emerged as the leading data and AI platform for unifying data engineering, analytics, and ML operations. But until now, customers looking to use open-source packages at scale have had to navigate complex setup processes, security tradeoffs, and a lack of native conda support in runtime environments.

That’s where Anaconda comes in. As the only unified AI platform for open source, and with a history of curating secure Python libraries, Anaconda helps bridge the gap between community innovation and enterprise trust. The new partnership and technical integration with Databricks eliminates the guesswork, friction, and risk for teams looking to accelerate their AI initiatives.

Organizations building AI systems at scale need more than experimentation environments—they need stability, consistency, and control. They need to know that when a model moves from one engineer’s laptop to a distributed production pipeline, the exact same dependencies and behavior will follow. That’s only possible with integrated, governed solutions that blend flexibility with discipline. This is exactly what enterprises need: a solution that delivers peace of mind with packages that simply work, unburdening teams from the constant cycle of reinventing the basics.

Meanwhile, modern enterprises are also under pressure to ensure their AI practices align with security and regulatory policies. Unmanaged packages from random repositories aren’t just a nuisance—they’re a liability. These real-world needs are what inspired the collaboration between Anaconda and Databricks.

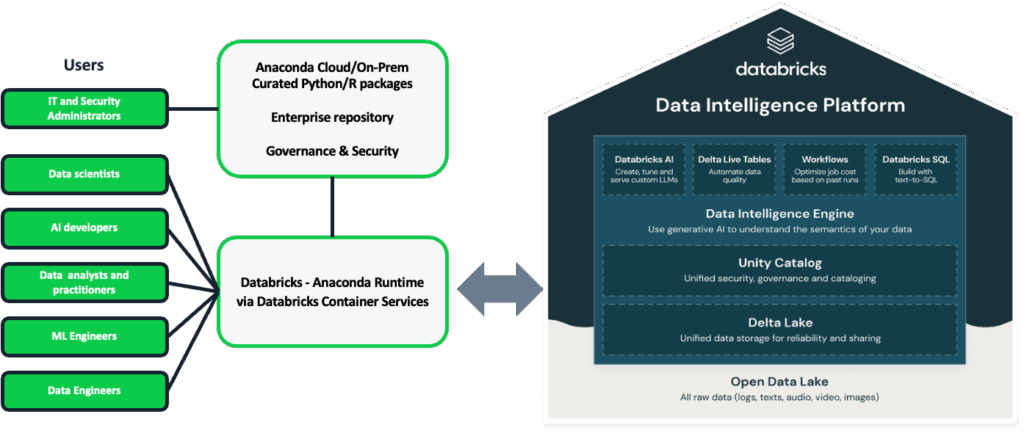

As a result of the partnership, Anaconda’s enterprise Python package distribution is available for integration within the Databricks environment, enabling AI developers, data scientists, and engineers to accelerate development with trusted, enterprise-grade open-source tools. This integration gives Databricks users direct access to Anaconda’s curated repository of packages—removing the friction of managing dependencies and version conflicts. With a simple configuration, teams can now use reliable, secure packages that are continuously maintained and supported by Anaconda, ensuring consistency across local and cloud-based workflows.

This partnership means faster data science, machine learning, and AI development, smoother collaboration, and better governance over open-source usage. Enterprises benefit from Anaconda’s security tooling, such as hand-curated vulnerability metadata, vulnerability and license filtering, and environment tracking and management, while leveraging Databricks’ scalable compute and collaborative workspace. For joint Anaconda and Databricks customers, this combines scalable analytics with responsible open-source management, accelerating innovation while maintaining compliance and security.

Problem: Governance, Friction, and Fragmentation in Open-Source AI

AI developers working in enterprise environments face several recurring issues:

- Manual installation of packages across different runtime environments, leading to drift and errors.

- Lack of reproducibility when transitioning models from local dev to cloud-hosted environments.

- Security vulnerabilities tied to unmanaged or outdated packages.

- Limited visibility into open-source license risks or CVE exposure.

For organizations in highly regulated industries like financial services, healthcare, and government, these issues can become blockers to AI deployment. Even for fast-moving teams, the time spent debugging dependency issues adds costly delays.

These challenges are particularly problematic in cross-functional teams where developers, data scientists, ML engineers, and security professionals must collaborate across environments. Without a unified source of truth for package versions and dependencies, teams risk wasting valuable time reconciling conflicts and explaining inconsistencies.
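To make the drift problem concrete, here is a minimal sketch of how a team might compare the pinned packages of two environments. It assumes both environments were exported in the `name=version=build` text format that `conda list --export` produces. The function names and the sample package pins are illustrative assumptions, not part of the Anaconda or Databricks tooling.

```python
from typing import Dict, List, Optional, Tuple


def parse_pins(export_text: str) -> Dict[str, str]:
    """Parse `name=version[=build]` lines into a {name: version} mapping."""
    pins: Dict[str, str] = {}
    for raw in export_text.splitlines():
        line = raw.strip()
        # Skip blank lines and the comment header that conda writes.
        if not line or line.startswith("#"):
            continue
        parts = line.split("=")
        if len(parts) < 2:
            continue
        pins[parts[0].lower()] = parts[1]
    return pins


def find_drift(
    local: Dict[str, str], cluster: Dict[str, str]
) -> List[Tuple[str, Optional[str], Optional[str]]]:
    """Return (package, local_version, cluster_version) for every mismatch.

    A version of None means the package is missing from that environment.
    """
    drift = []
    for name in sorted(set(local) | set(cluster)):
        local_version = local.get(name)
        cluster_version = cluster.get(name)
        if local_version != cluster_version:
            drift.append((name, local_version, cluster_version))
    return drift


# Hypothetical exports from a laptop and from a cluster environment.
LOCAL_EXPORT = """# platform: osx-arm64
numpy=1.26.4=py311_0
pandas=2.2.1=py311_0
scikit-learn=1.4.2=py311_0
"""

CLUSTER_EXPORT = """# platform: linux-64
numpy=1.26.4=py311_0
pandas=2.1.4=py311_0
xgboost=2.0.3=py311_0
"""

for name, local_v, cluster_v in find_drift(
    parse_pins(LOCAL_EXPORT), parse_pins(CLUSTER_EXPORT)
):
    print(f"{name}: local={local_v} cluster={cluster_v}")
```

A check like this only reports mismatches. Resolving them still requires a shared, governed package source that both environments install from.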

The Solution: Native Anaconda Integration in Databricks Runtime

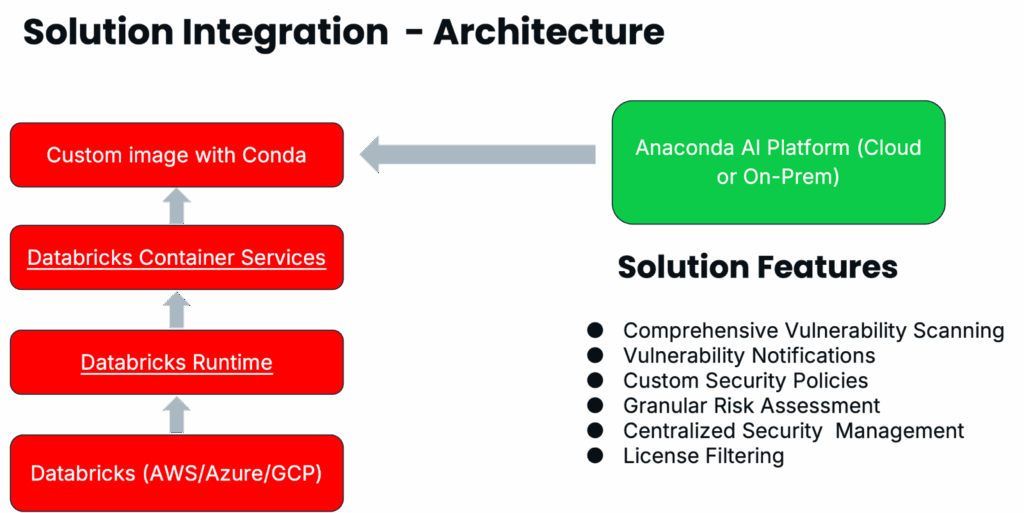

The joint solution leverages the flexibility of Databricks Container Services and the extensibility of the Databricks Runtime to support custom container images that include Anaconda’s Python packages via conda.

This is supported by Anaconda’s AI Platform, which can be deployed either in the cloud or on-premises to meet enterprise security and compliance requirements.
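As a rough illustration of what a pinned conda environment for such a custom image could look like, the sketch below renders a standard `environment.yml` from a dictionary of exact versions. The channel URL, environment name, and package versions are placeholders chosen for this example, and the helper function is hypothetical. The actual channel address and image build steps depend on how the Anaconda AI Platform and Databricks Container Services are configured in a given organization.

```python
from typing import Dict, List


def render_environment_yaml(
    name: str, channels: List[str], pins: Dict[str, str]
) -> str:
    """Render a conda environment.yml with exact version pins."""
    lines = [f"name: {name}", "channels:"]
    lines += [f"  - {channel}" for channel in channels]
    lines.append("dependencies:")
    # Sort the packages so the rendered file is stable across runs.
    lines += [f"  - {pkg}={version}" for pkg, version in sorted(pins.items())]
    return "\n".join(lines) + "\n"


# Placeholder values: replace with the organization's governed channel
# and its approved package versions.
APPROVED_CHANNEL = "https://conda.example.internal/approved"

env_yaml = render_environment_yaml(
    name="fraud-model",
    channels=[APPROVED_CHANNEL],
    pins={"python": "3.11", "pandas": "2.2.1", "numpy": "1.26.4"},
)
print(env_yaml)
```

A file like this can be committed alongside the model code so that the same specification is used to build the container image and to recreate the environment locally.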

The new integration delivers Anaconda’s curated package repository directly within Databricks Runtime across AWS, Azure, and GCP. Here’s how it works:

- Native Conda Access: Databricks users now get seamless access to trusted, validated Python packages maintained by Anaconda, without manual installation.

- Custom Images with Conda: Teams can configure custom container images preloaded with conda environments, accelerating setup and onboarding.

- Package Security Manager (PSM): Enterprises can optionally deploy Anaconda’s PSM on-prem or in the cloud to enforce security policies, scan for vulnerabilities, and ensure compliance with open-source usage.

These capabilities provide a single solution that meets the needs of security, compliance, and AI productivity. By embedding the curated Python stack into Databricks’ compute infrastructure, enterprises no longer need to choose between agility and control.

The solution runs through the Databricks Container Services and Runtime layers. Here’s a simplified flow:

- Platform Layer: Customers deploy Databricks on AWS, Azure, or GCP.

- Runtime Layer: A Databricks Runtime environment is instantiated, ready to run notebooks, pipelines, or model training jobs.

- Container Services Layer: Custom container images configured with Conda environments are loaded into the runtime.

- Package Management Layer: These containers are integrated with the Anaconda AI Platform (cloud or on-prem), delivering access to trusted open-source packages.

With CVE scanning, license filtering, and security policy enforcement built into the pipeline, teams can build ML models with confidence from start to scale. This also lays the groundwork for enhanced auditing, traceability, and reproducibility across model lifecycles.
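The idea of license filtering and CVE-based policy enforcement can be sketched in a few lines. The example below is a conceptual illustration only: the `PackageRecord` and `Policy` types, the sample packages, and the placeholder CVE identifier are all assumptions made for this sketch, and it does not represent Anaconda’s actual API or metadata format.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class PackageRecord:
    """Hypothetical metadata for one package version."""
    name: str
    version: str
    license: str
    cve_ids: Tuple[str, ...] = ()


@dataclass(frozen=True)
class Policy:
    """Hypothetical organization policy."""
    allowed_licenses: FrozenSet[str]
    block_on_any_cve: bool = True


def evaluate(pkg: PackageRecord, policy: Policy) -> List[str]:
    """Return the reasons a package violates the policy (empty if it passes)."""
    reasons = []
    if pkg.license not in policy.allowed_licenses:
        reasons.append(f"license {pkg.license} not allowed")
    if policy.block_on_any_cve and pkg.cve_ids:
        reasons.append("known CVEs: " + ", ".join(pkg.cve_ids))
    return reasons


def split_by_policy(
    packages: List[PackageRecord], policy: Policy
) -> Tuple[List[str], Dict[str, List[str]]]:
    """Split packages into approved names and rejected names with reasons."""
    approved: List[str] = []
    rejected: Dict[str, List[str]] = {}
    for pkg in packages:
        reasons = evaluate(pkg, policy)
        if reasons:
            rejected[pkg.name] = reasons
        else:
            approved.append(pkg.name)
    return approved, rejected


# Made-up sample data; the CVE identifier is a placeholder, not a real entry.
POLICY = Policy(allowed_licenses=frozenset({"BSD-3-Clause", "Apache-2.0", "MIT"}))
PACKAGES = [
    PackageRecord("pkg-a", "1.0.0", "BSD-3-Clause"),
    PackageRecord("pkg-b", "2.3.1", "GPL-3.0"),
    PackageRecord("pkg-c", "0.9.2", "MIT", cve_ids=("CVE-0000-0001",)),
]

approved, rejected = split_by_policy(PACKAGES, POLICY)
print("approved:", approved)
print("rejected:", rejected)
```

In practice, a governed repository applies rules like these before packages ever reach the runtime, so data scientists only see versions that already passed the policy.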

Use Case: Secure AI at Scale

Imagine a financial services company building fraud detection models across multiple regions. Before this integration, the data science team had to:

- Manually create and distribute conda environments.

- Track package versions across local machines and the cloud.

- Manually monitor packages for CVEs.

Now, with the Anaconda integration:

- Data scientists use pre-approved environments provisioned within Databricks.

- Security teams get automated vulnerability scanning and license filtering.

- Workflows move faster from experimentation to production without compliance blockers.

These benefits extend to regulated research environments in life sciences, retail analytics workflows, and predictive maintenance solutions in manufacturing. Anywhere AI meets production requirements, this integration unlocks safer and faster deployment.

Impact and Results

The Anaconda-Databricks integration is designed to deliver the following results:

- Accelerated AI development: Teams eliminate days or weeks spent managing dependencies.

- Improved reproducibility: Model training and deployment environments stay in sync.

- Reduced risk: CVE scanning and policy enforcement deliver peace of mind.

- Higher productivity: Data scientists focus on model building, not package troubleshooting.

- Cross-platform consistency: Teams can develop locally, test in staging, and deploy in production with confidence in package integrity.

Additionally, security and governance teams benefit from centralized controls and audit trails. Licensing risks are mitigated, CVEs are flagged early, and dependency transparency supports internal policy enforcement.

From a business standpoint, this drives:

- Faster model time-to-market.

- Fewer deployment delays.

- Lower remediation and compliance costs.

3 Ways Customers Benefit from Anaconda + Databricks

- Faster Onboarding: Get new users up and running in Databricks with pre-configured Anaconda environments that eliminate dependency issues.

- Built-in Security and Compliance: Gain peace of mind with curated open-source packages, vulnerability scanning, and license management built directly into your workflows.

- Seamless Model Lifecycle Management: Move AI/ML models from development to production without worrying about reproducibility or environment mismatches.

Broader Impact

The Anaconda-Databricks partnership reflects a broader movement in enterprise AI toward secure, scalable, and governable development environments.

It addresses one of the most fundamental tensions in the industry: how to combine the speed of open-source experimentation with the rigor of enterprise requirements.

By embedding Anaconda directly within Databricks, the two companies are redefining what secure open-source usage can look like at scale. It also signals a deeper alignment with the core needs of data leaders: governance, reproducibility, velocity, and trust.

This partnership also aligns with key trends such as:

- The rise of composable MLOps platforms.

- Increasing demand for open-source transparency.

- Greater emphasis on responsible and ethical AI deployment.

For open-source contributors, this partnership represents an important step toward ensuring their work is used responsibly and sustainably in production systems.

For IT leaders, it provides a bridge between innovation and control—enabling them to unlock the full power of open-source without compromising enterprise standards.

Final Thoughts: A Foundation for Trustworthy AI

As the enterprise AI landscape matures, success depends not just on model performance but also on the trust, reproducibility, and compliance of the entire development pipeline.

With this native integration and co-sell partnership, Anaconda and Databricks are setting a new bar for responsible AI innovation.

Together, we’re empowering data teams to build faster, deploy smarter, and govern better—so enterprises can realize the true potential of artificial intelligence.

Whether you’re a data scientist, MLOps engineer, or enterprise architect, the Anaconda + Databricks integration gives you the tools to build and scale AI with confidence.

By eliminating friction, improving reproducibility, and automating governance, this solution helps you spend less time on infrastructure and more time solving real-world problems with AI.

With secure, native open-source access in Databricks, the future of responsible, enterprise-ready AI is here.

Ready to take control of your AI workflows?

Explore the Anaconda + Databricks integration today or talk to Anaconda Professional Services to get started with your secure open-source strategy.

Reach out to your Databricks account team or Anaconda partner lead to activate co-sell resources, demo environments, and customer-ready deployment guides.

Michael Hannigan is the Manager of Sales Engineering at Anaconda, where he works at the intersection of open-source software, enterprise AI, and secure development workflows. With over a decade of experience in data science and platform integration, he’s passionate about helping teams scale AI responsibly and reproducibly.
