Pyston Team Joins Anaconda to Expand Open-Source Project Development

This post was co-authored by Anthony DiPietro, Software Engineer, Anaconda and Kirill Petrov, Machine Learning Engineer, Intel.

Anaconda and Intel are collaborating to build key open-source data science packages optimized for Intel hardware to make machine learning fast and scalable for practitioners everywhere.

To deliver the best possible user experience for data science practitioners, popular data science packages and libraries optimized for Intel® oneAPI Math Kernel Library (oneMKL), including NumPy and SciPy, are now easily accessible in the defaults channel of the Anaconda repository. As most of you might know, these packages are at the heart of scientific computing in the Python ecosystem. Built on top of these libraries is the machine learning module Scikit-learn, a staple for data science practitioners.

When it comes to machine learning, better performance can be the difference between models getting into production or not. Through our collaboration with Intel, enterprises can now leverage open-source innovation on powerful hardware, resulting in accelerated time to value for the business.

Intel® Extension for Scikit-learn provides drop-in replacement patching functionality for a seamless way to speed up your Scikit-learn application. We achieve this through the use of the Intel® oneAPI Data Analytics Library (oneDAL) and its convenient Python API daal4py powering the extension underneath.

Underneath Intel Extension for Scikit-learn, the user-accessible daal4py also provides highly configurable machine learning kernels, some of which support streaming input data and can scale out to clusters of workstations easily and efficiently. Also, it provides model converters for XGBoost and LightGBM to run fast inference without loss of accuracy. Internally, it uses oneDAL to deliver the best performance.

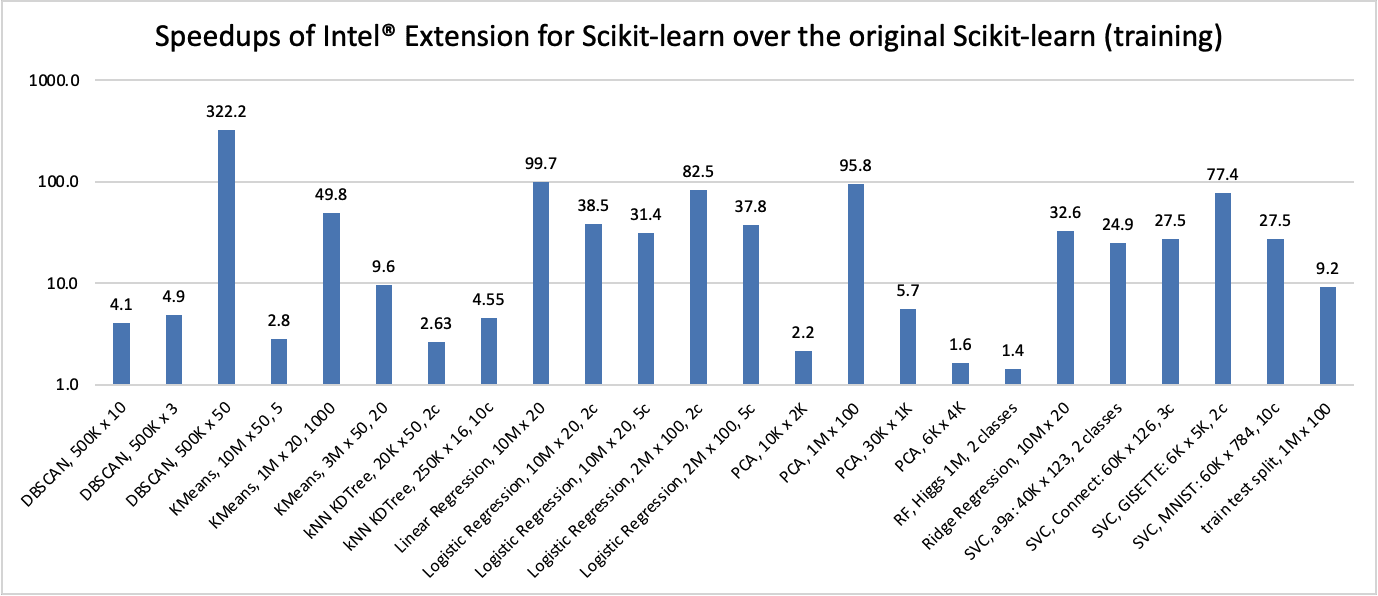

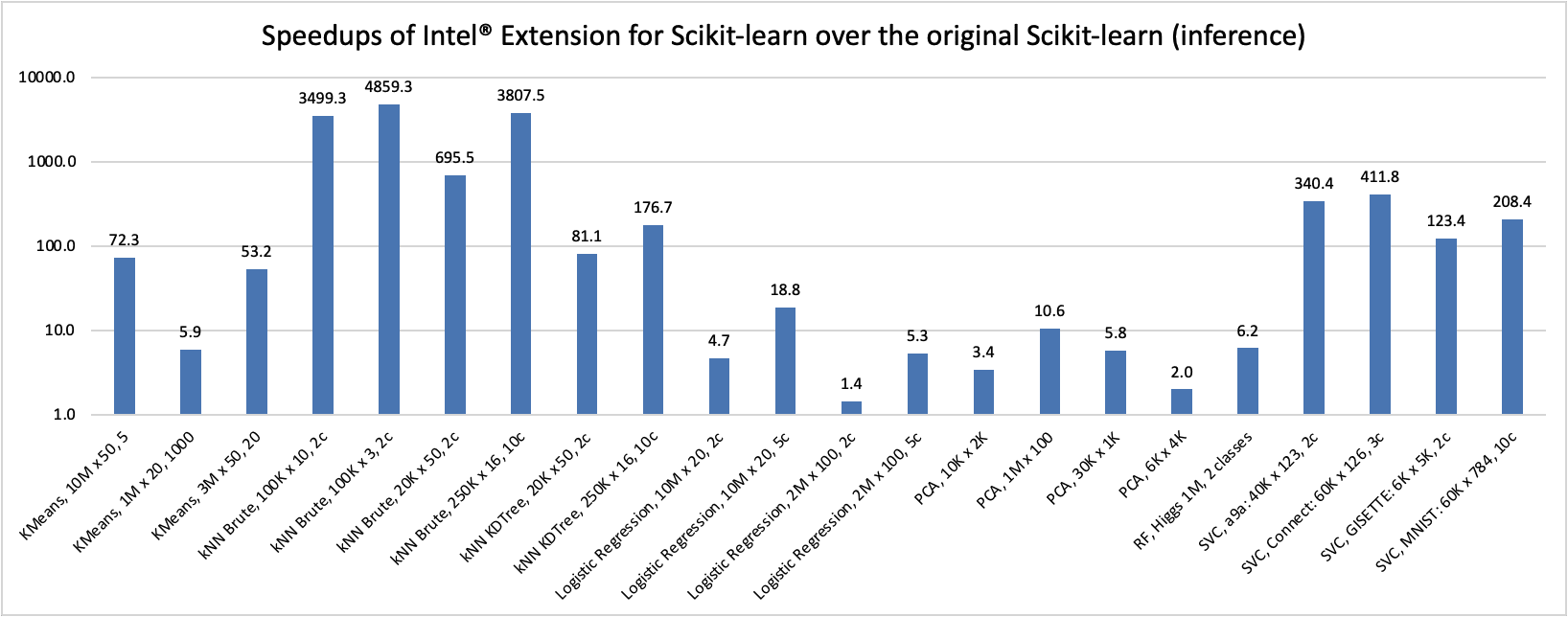

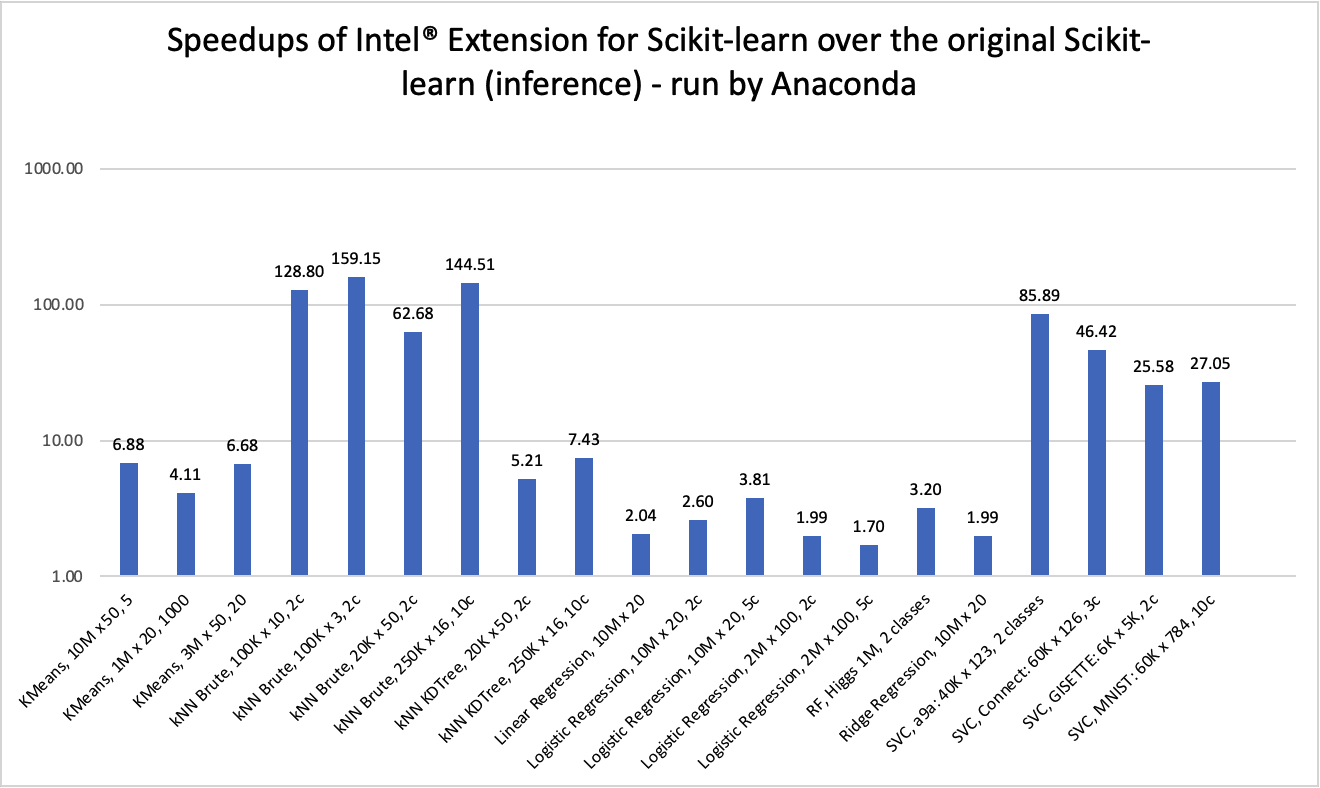

To see how much time and money the Intel® Extension for Scikit-learn can save, we compare timing benchmarks for the patched Scikit-learn against the original Scikit-learn. Note: Intel® Extension for Scikit-learn currently supports optimizations for the last four versions of scikit-learn. The latest release, scikit-learn-intelex 2021.2.X, supports scikit-learn 0.21.X, 0.22.X, 0.23.X, and 0.24.X.

These tests were performed on a c5.24xlarge AWS EC2 instance using an Intel Xeon Platinum 8275CL with 2 sockets and 24 cores per socket, with Scikit-learn version 0.24.2, scikit-learn-intelex version 2021.2.3, and Python 3.8, running Ubuntu 18.04.5 LTS with 192 GB of memory. The times of both the training and prediction stages of common machine learning algorithms improve significantly. Each number is a speedup factor: how many times faster the Intel® Extension for Scikit-learn runs than the original Scikit-learn on the same task.

To give an example, let’s look at the training time of logistic regression with 10 million samples, 2 classes, and 20 features (columns). The original Scikit-learn took 90.44 seconds to run, while the Intel® Extension for Scikit-learn, with the same performance, took 2.35 seconds. To get the speedup factor, simply divide: 90.44 / 2.35 ≈ 38.5 times faster!
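The division above can be sketched in a few lines of Python. The two timings are the ones quoted in this post; the helper name `speedup` is only an illustrative choice and not part of any library.

```python
# Timings quoted in the post for logistic regression training, in seconds.
stock_seconds = 90.44    # original Scikit-learn
patched_seconds = 2.35   # Intel Extension for Scikit-learn


def speedup(baseline_seconds: float, optimized_seconds: float) -> float:
    """Return how many times faster the optimized run is than the baseline.

    Hypothetical helper for illustration only.
    """
    if optimized_seconds <= 0:
        raise ValueError("optimized_seconds must be positive")
    return baseline_seconds / optimized_seconds


factor = speedup(stock_seconds, patched_seconds)
print(f"{factor:.1f}x faster")  # prints "38.5x faster"
```

The same helper can be applied to any pair of timings you collect yourself, as long as both runs use the same data and the same algorithm settings.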

To reproduce the numbers, you can run the following commands using scikit-learn_bench:

Intel® Extension for Scikit-learn enabled:

python runner.py --configs configs/blogs/skl_conda_config.json --output-file result.json --report

The original Scikit-learn:

python runner.py --configs configs/blogs/skl_conda_config.json --output-file result.json --report --no-intel-optimized

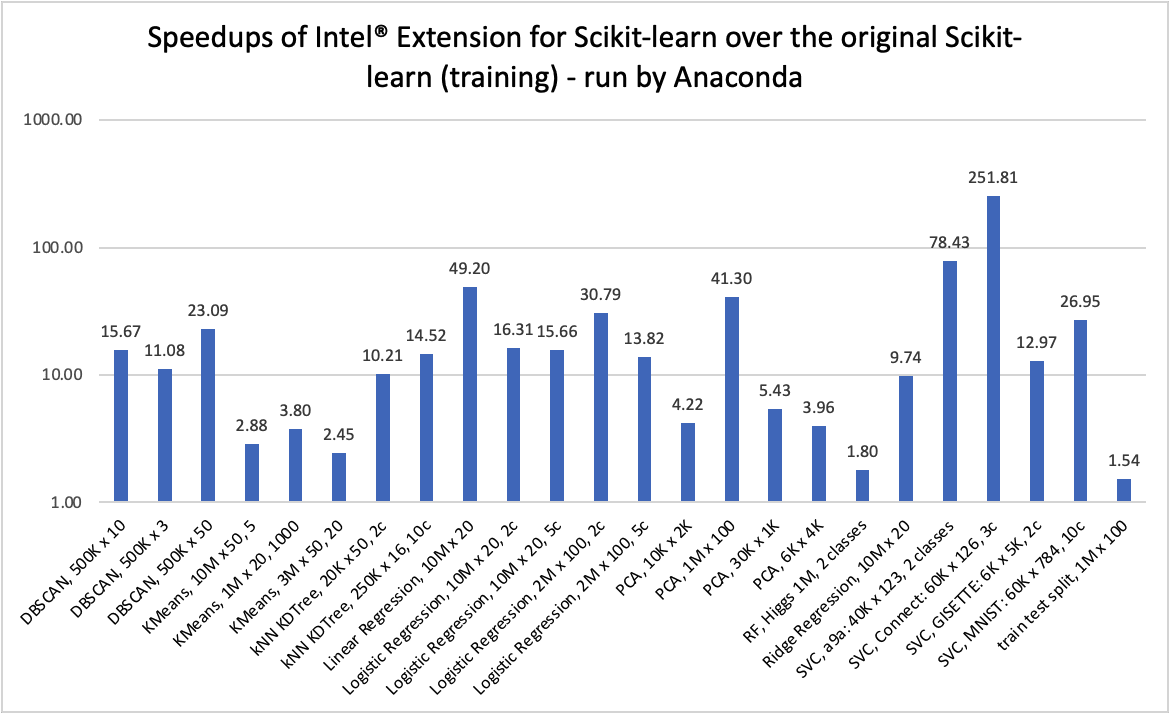

Here at Anaconda, we wanted to look at these benchmarks on our own hardware as well. The machine is neither the latest nor the most powerful, but running the same tests on this modest system still yielded impressive performance results. The following results are from an Intel® Core™ i7 10710U with 1 socket and 6 cores per socket, using Scikit-learn version 0.24.2, scikit-learn-intelex version 2021.2.2, and Python 3.8 on Windows 10 Pro with 32 GB of memory.

While the cases covered vary in several ways, we saw that the Intel® Extension for Scikit-learn was, on average, 27 times faster in training and 36 times faster during inference. The data clearly show that unlocking significant performance gains on these workloads is just a conda install away.

Users can access the speed improvements of Intel® Extension for Scikit-learn and the underlying power of Intel® oneAPI Data Analytics Library directly from the defaults channel in the Anaconda repository. It will be regularly updated as the open-source community publishes new releases.

To install these Intel-optimized packages for scikit-learn on Windows, Mac, and Linux x86_64, simply run:

conda install scikit-learn-intelex

Once installed, there are two ways in which you can enable the replacement patching functionality for scikit-learn. You can enable it when you run your application:

python -m sklearnex my_application.py

Or you can explicitly enable the patching in your code:

from sklearnex import patch_sklearn

patch_sklearn()
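As a slightly fuller sketch of the in-code approach, the snippet below applies the patch before importing any scikit-learn estimators, which is the order the Intel Extension for Scikit-learn documentation asks for. The helper `enable_intel_patch`, the fallback to stock scikit-learn when the extension is missing, and the small synthetic dataset are assumptions added for illustration; they are not part of the extension itself.

```python
import importlib.util


def enable_intel_patch() -> bool:
    """Patch scikit-learn if scikit-learn-intelex is installed.

    Hypothetical helper: returns True when the patch was applied and
    False when the sklearnex package is not available.
    """
    if importlib.util.find_spec("sklearnex") is None:
        return False
    from sklearnex import patch_sklearn
    patch_sklearn()
    return True


# Patch first, so that the estimators imported below pick up the
# accelerated implementations where the extension provides them.
patched = enable_intel_patch()
print("Intel Extension enabled:", patched)

# Only run the toy training step when scikit-learn itself is installed.
if importlib.util.find_spec("sklearn") is not None:
    from sklearn.datasets import make_classification
    from sklearn.linear_model import LogisticRegression

    # Small synthetic problem: 2 classes and 20 features, far smaller
    # than the 10-million-sample benchmark described in the post.
    X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
    model = LogisticRegression(max_iter=1000).fit(X, y)
    print("Training accuracy:", model.score(X, y))
```

The rest of the application stays unchanged, which is what makes the extension a drop-in replacement.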

Download Miniconda, Anaconda Individual Edition¹, or Anaconda Commercial Edition to start using Intel-optimized packages today. Additionally, keep an eye out for further ways Intel and Anaconda are working together to speed up and scale out more aspects of your data science toolkit.

For more information, please visit our docs.

Intel Extension for Scikit-learn documentation

Intel Extension for Scikit-learn GitHub page

¹ Subject to Anaconda’s Terms of Service

Notices & Disclaimers

Performance varies by use, configuration and other factors. Performance results are based on Anaconda testing as of dates shown in configurations and may not reflect all publicly available updates. Past performance doesn’t necessarily indicate future results. Results may vary.
